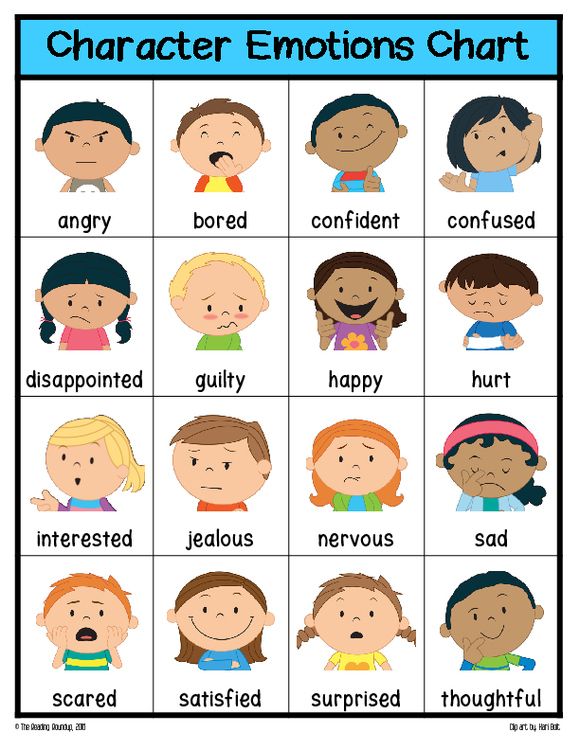

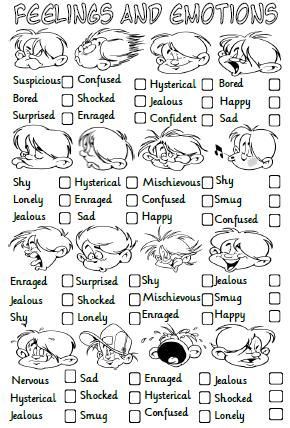

Recognizing emotions activity

10 Activities for Teaching Young Children About Emotions

Having the vocabulary to talk about emotions is an important part of healthy social-emotional development—and as all of us continue to navigate the challenges of the COVID-19 era together, helping kids talk about their feelings is more important than ever.

In today’s post, we’re sharing a few simple games and activities you can use to teach young children about emotions: how to recognize and name them, how to talk about them, and how to pick up on the feelings of others. Adapted from some new and classic Brookes resources on social-emotional development, these activities are ideal for use in early childhood programs (and parents can easily adapt them for home, too!).

What’s your favorite way to get young children talking about emotions? Add your idea in the comments below!

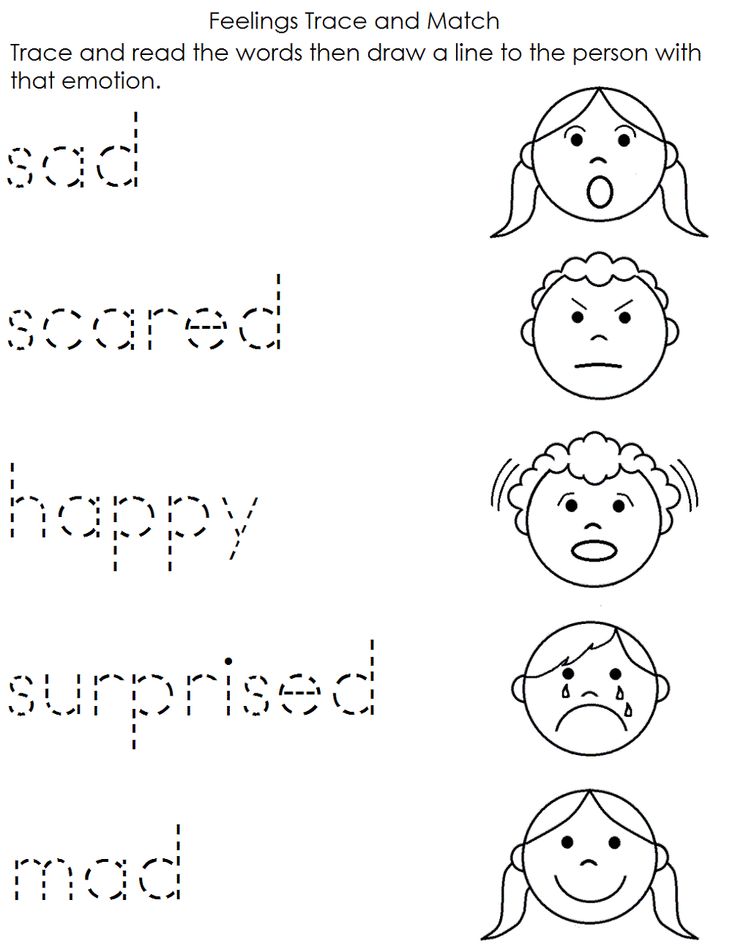

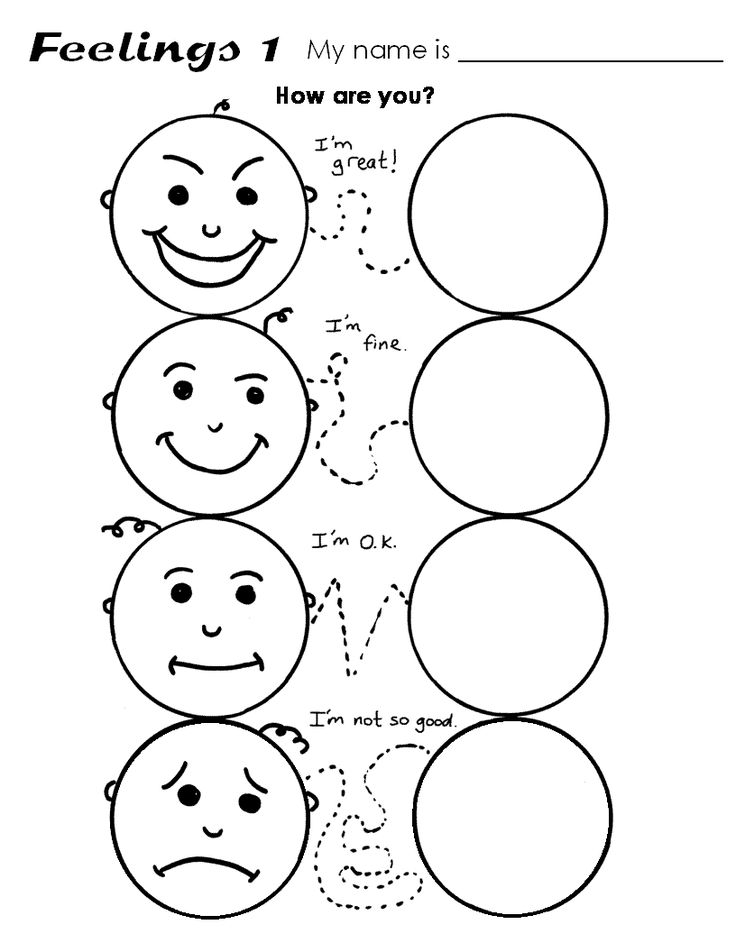

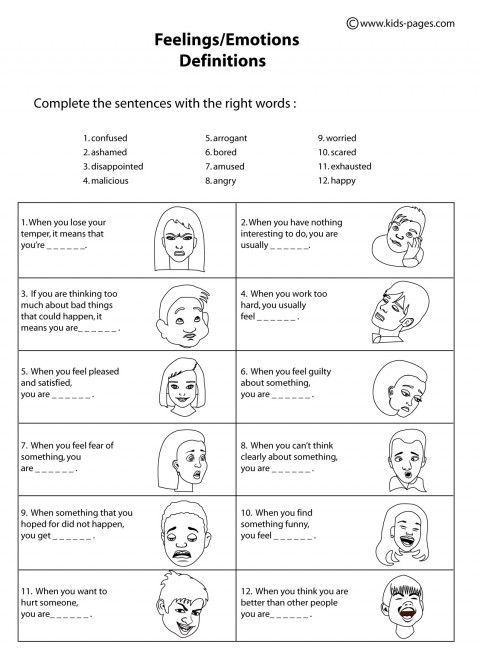

Feelings ID

This activity is a great starting point for teaching young children about emotions. Here’s what to do:

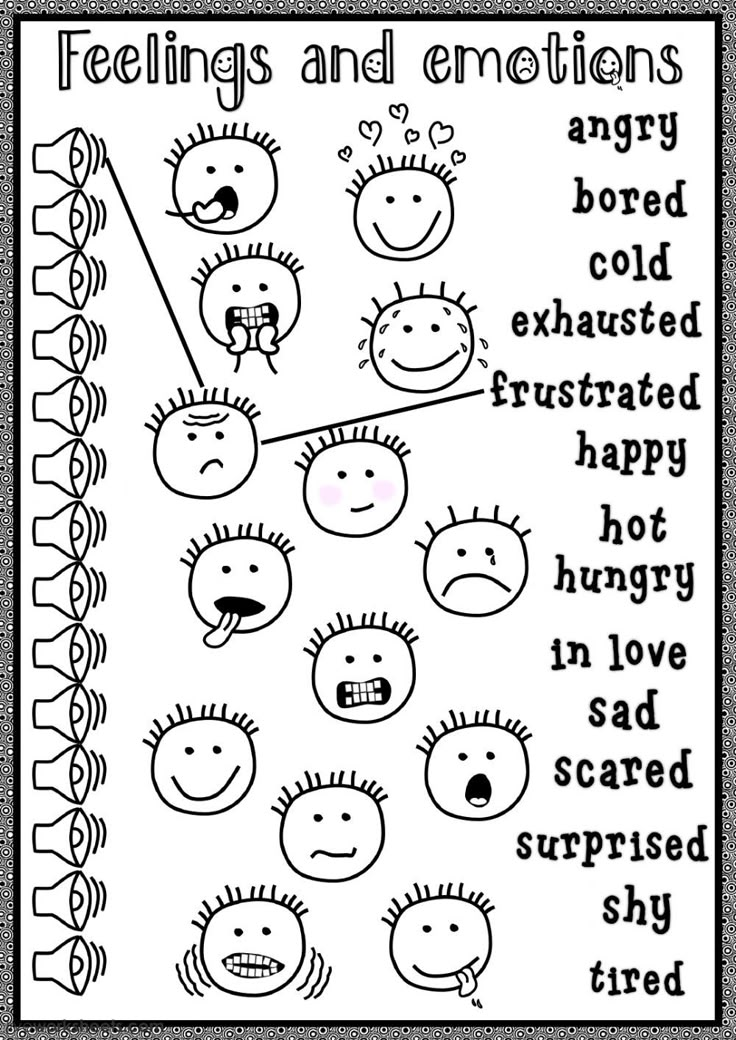

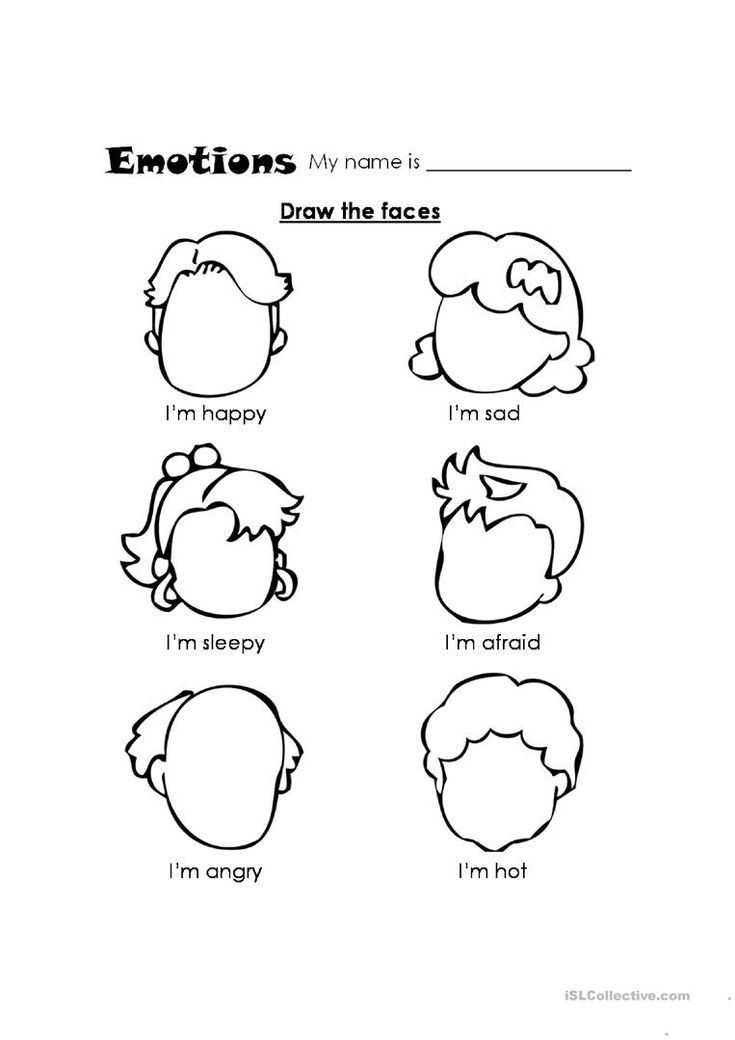

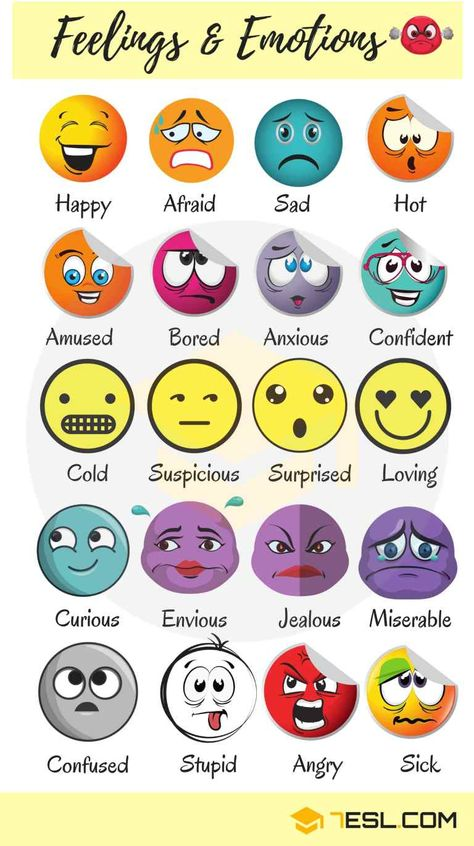

- Generate a list of feelings. Start with a basic feeling, such as happy or sad, and explain that this is a feeling. Give a second example, using a more complex feeling such as excited or surprised. Ask students to generate other feelings, add them to the list, and display the list for students on chart paper or with a projector.

- Identify feelings as good or not so good. Go back to the start of your feelings list, and have the students give you a thumbs-up for feelings that make people feel good on the inside and a thumbs-down for feelings that make people feel not so good on the inside.

- Conduct a follow-up discussion. Ask students if they have ever had any feelings where it was hard to decide if the feeling made them feel good or not so good on the inside. Give an example.

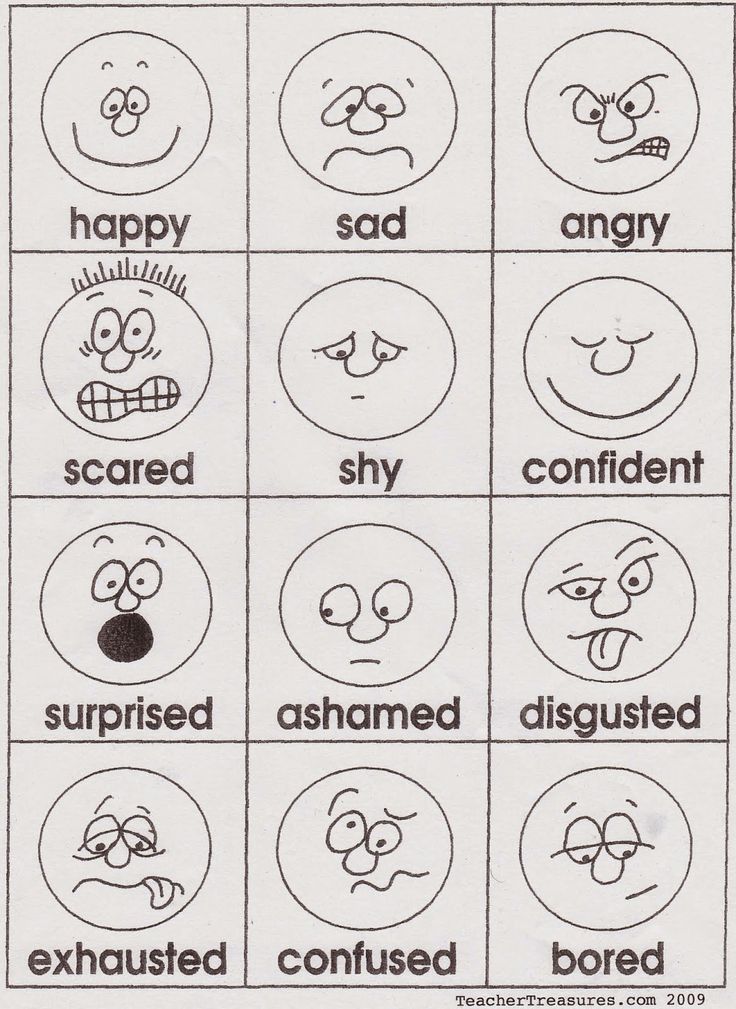

Feeling Dice Game

Create “feeling dice” using clear acrylic photo cubes: slide drawings of faces depicting different emotions into each side. (You could also use photos or cutouts from magazines instead of drawings.) In a small group, give each child a chance to roll the die. When it lands, ask the child to identify the feeling and describe a time when they felt that way.


How Would You Feel If…

Brainstorm some common scenarios that might elicit different feelings. A few examples:

- “Your grandma picked you up after school and took you to get ice cream.”

- “Your classmate spilled paint on your drawing.”

- “Your mom yelled at you.”

- “Your brother wouldn’t let you have a turn on the swings.”

Put the scenarios in a hat and pass the hat around the circle or small group while you play music. When you stop the music, the child left holding the hat should pick out a scenario (you can help read it for the child if they can’t yet read). Then ask the child to describe how they would feel if the scenario happened to them.

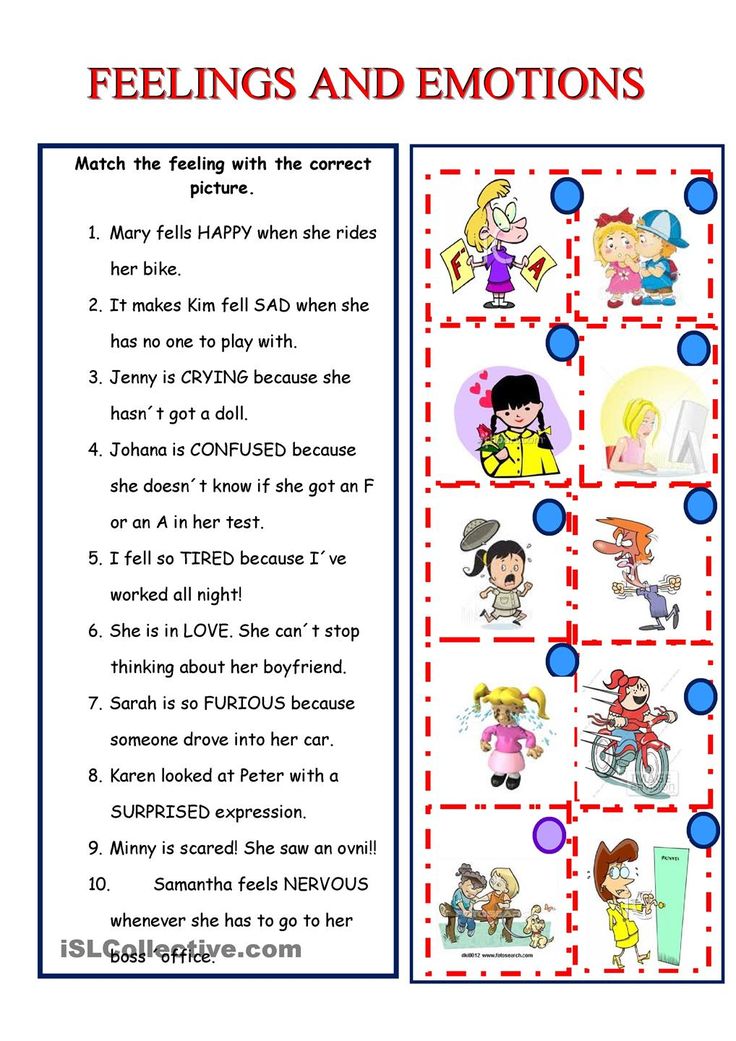

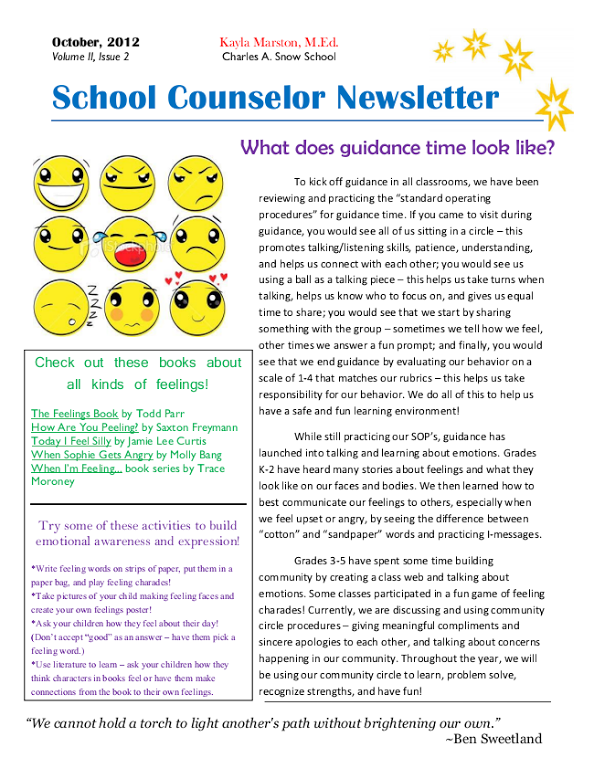

Read & Learn

Choose a book about feelings to share with students, or read a book from the following list of examples: Feelings by Aliki, The Way I Feel by Janan Cain, Feelings by Joanne Brisson Murphy, The Feelings Book by Todd Parr, and My Many Colored Days by Dr. Seuss. Be sure to point out all the actions or ways in which the characters behave when they’re acting on their feelings. Use the following questions to guide your class discussion about emotions:


- What was one of the feelings the character had?

- Do you think it was a good or not so good feeling?

- What did the character do when he or she was feeling that way?

- Was it an okay or not okay way of showing the feeling?

- Can you think of a time when you felt that way? What kind of face can you make to show that feeling?

Feeling Wheel Game

Create a spinning wheel that features different feeling faces. (Need tips on making one? This blog post shows you how to make spinners for games using items you probably have easy access to.) Give each child a chance to spin the feeling wheel. When the spinner lands on a feeling face, ask the child to identify the feeling and talk about an incident that made them feel that emotion.

Emotion Charades

Form small groups of students. Using laminated cards with illustrations of feelings on them, a large die, or a beach ball with each stripe of color preassigned to represent a feeling, have students act out the face and body clues that show the feeling they drew or rolled. Ask the other students to take turns guessing which feeling is being acted out.

Feeling Face Bingo

Create feeling face bingo boards for your students, each with 12 squares that feature various feeling faces (you can add more squares for older children). Have children draw a feeling name from a bag and then cover the matching feeling face with the paper that was drawn from the bag. When they cover a face, they can talk about events or memories that made them feel that emotion.

Puppet Play

Puppet play is a good activity to try one-on-one or in small groups to help children explore and express their feelings, ideas, and concerns. Many children find it easier to talk about feelings during puppet play, because it can give them some distance from scary or upsetting issues.


Encourage children to pick up a puppet and be its voice while you or another child or adult adopts the character of another puppet. You can discuss the children’s feelings indirectly and offer another point of view through your puppet. Reversing the characters so that children play another role can also promote empathy by helping kids experience how others feel.

Feelings Collage

For this activity, you’ll need old magazines and some basic art supplies: posterboard or construction paper, scissors, and glue sticks. Invite your students to cut pictures from the magazines of people expressing any kind of feeling, and instruct them to use these images to build a “feelings collage.” Hand out markers and ask students to label each picture in their collage with a feeling word; then, have them take turns explaining their collages and feeling labels to the group. Encourage your students to elaborate on the details of what they noted regarding the person’s facial expression, their body language, or the context of the photo or illustration. When the activity is over, let your students take the collages home and post them in a prominent place so they can practice identifying and labeling their own feelings.


Feeling Face Memory Game

Make 12 pairs of matching feeling faces (you can draw them or find appropriate photos or illustrations to print out). Turn the face cards face down and arrange them in a grid. Ask each child to turn over two cards to try to get a match. When they find a match, they can say what the feeling is and describe a time when they felt that way. They set the match aside, and children keep going until all matches are found.

***

Try these games and activities with your students (or at home with your own children), and let us know which ones worked best for you! And for more ways to help promote healthy social-emotional development in young children, check out the books we adapted this week’s post from:

Activity 1: Adapted from Merrell’s Strong Start—Grades K–2, by Sara A. Whitcomb, Ph.D., & Danielle M. Parisi Damico, Ph.D.


Activities 2, 3, 5, 7, and 10: Adapted from Unpacking the Pyramid Model, edited by Mary Louise Hemmeter, Ph.D., Michaelene M. Ostrosky, Ph.D., & Lise Fox, Ph.D.

Activity 8: Adapted from Pathways to Competence, Second Edition, by Sarah Landy, Ph.D.

Activities 4, 6, and 9: Adapted from Merrell’s Strong Start—Pre-K, by Sara A. Whitcomb, Ph.D., & Danielle M. Parisi Damico, Ph.D.


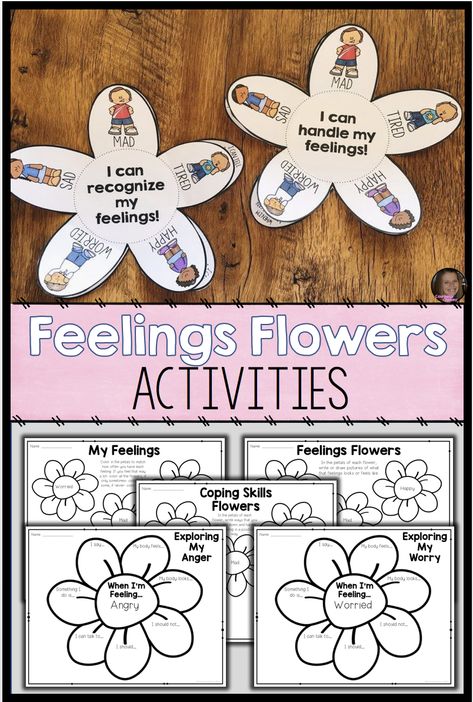

Super Fun Activities to Help Kids Recognize Big Emotions


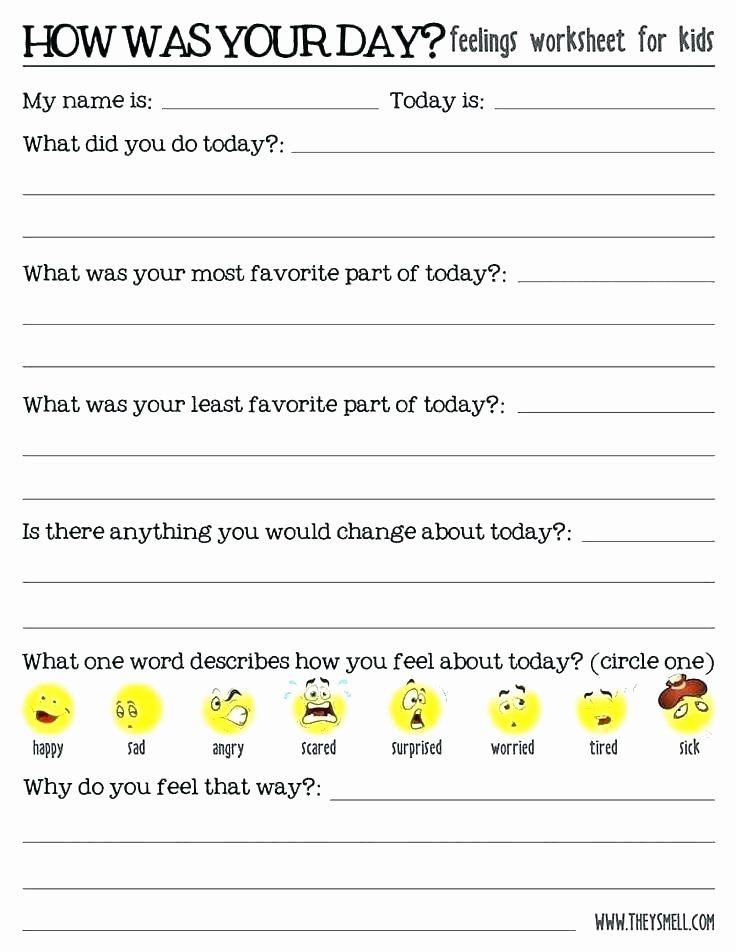

Just the other day we were sitting at the kitchen table when I heard the oldest scream at his three-year-old sister… STOP IT! Immediately, time seemed to stop. I didn’t see her do anything, and I didn’t see the buildup. What in the world could have gotten into this kid to make him haul off on his sister like that, seemingly out of the blue?


I wanted to say, “Whoa, hold up, buddy. Don’t talk to your sister that way.” Instead, I could see the irritation on his face and could see he was struggling even to identify the emotions he was having, so I held my tongue, took a deep breath and gently said, “That was really loud. It seems like you might be angry with your sister. Would you agree with this feeling?”

With big tears in his eyes, he looked up at me and said, “No. I’m not angry. I don’t know what’s wrong.” It was then I realized that my son didn’t actually have the words to describe how he was feeling and he didn’t know how to retrieve the solutions from his toolbox in the heat of the moment.

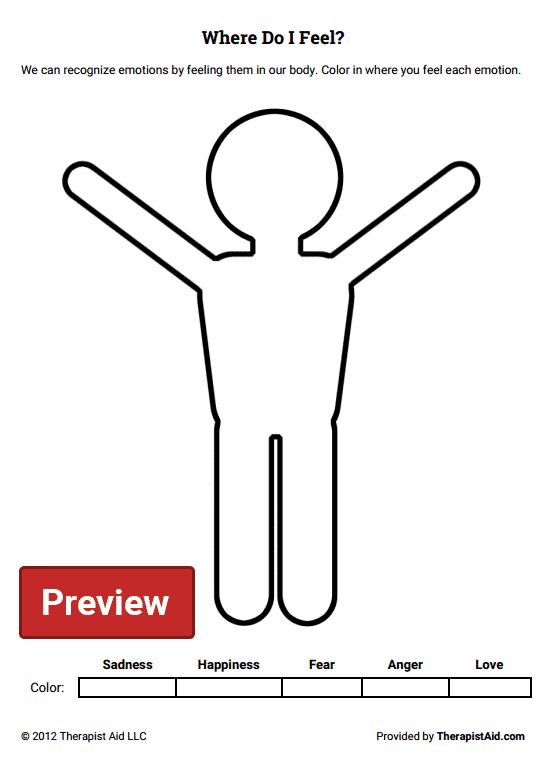

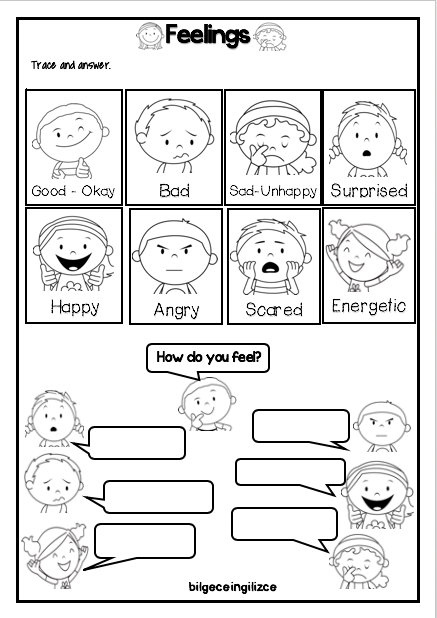

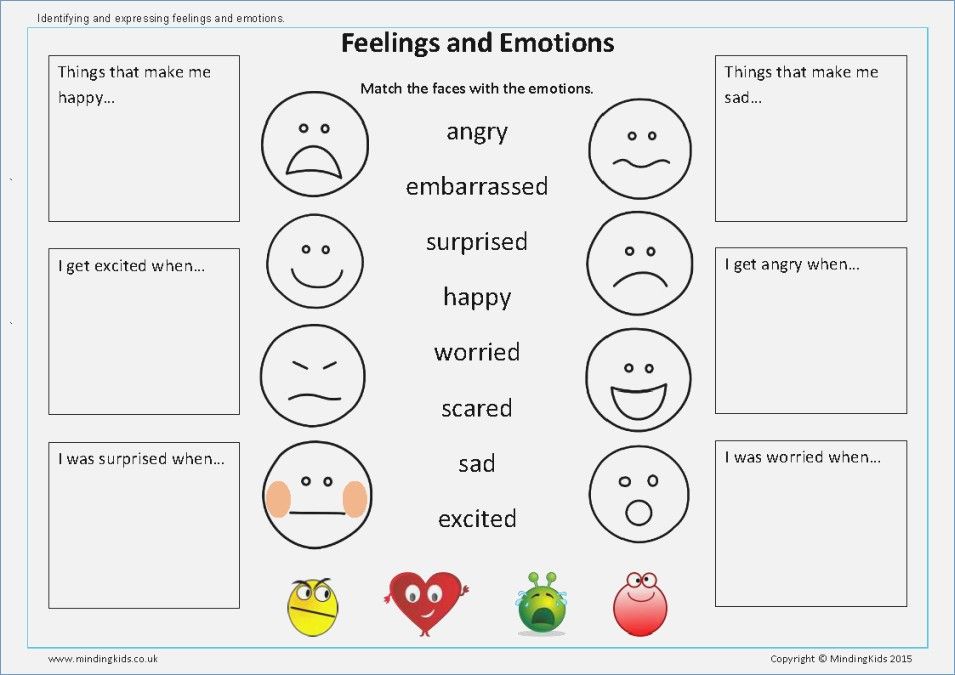

Kids feel emotions in big ways! Coaching kids through these emotions is important in helping them grow and work through anxiety or anger. And thankfully, we have these super fun activities to help kids recognize big emotions.

Teaching emotions can be done in a variety of fun ways. Printables, books and hands-on play activities all help teach the concepts of feelings and develop emotionally intelligent kids.

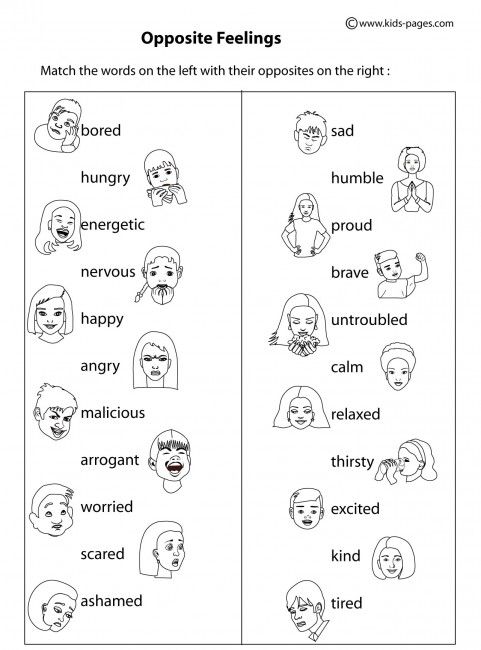

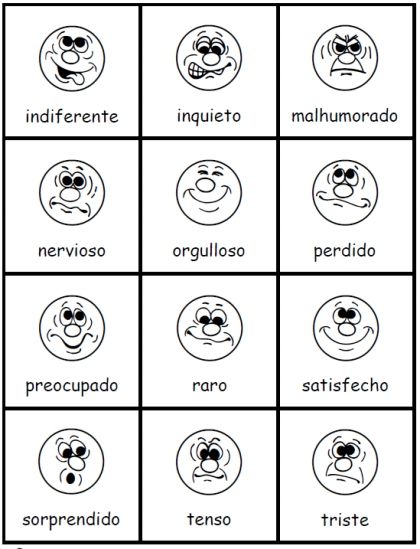

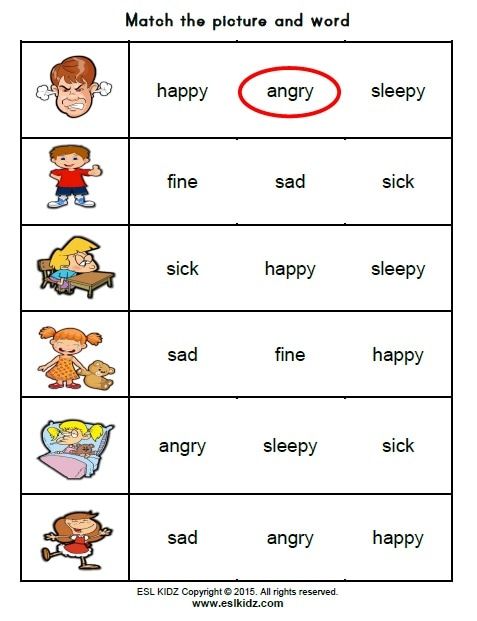

Printables to Help Kids Recognize Emotions

Monstrous Emotions Printable | Lemon Lime Adventures

Exploring Emotions Game with Free Printable Cards | Sunny Day Family

Lego Drawing Emotions with Printable | Little Bins for Little Hands

Emotions Free Printable Board Game | Life Over C’s

Ceasar the Emotional Dragon | Lemon Lime Adventures

Printable Pumpkin Faces Matching Activity | Practical Mommy

Emotions Printable Card Game | Childhood 101

The Emotional Robot | Lemon Lime Adventures

Managing Your Feelings Printable Pack | Kori at Home

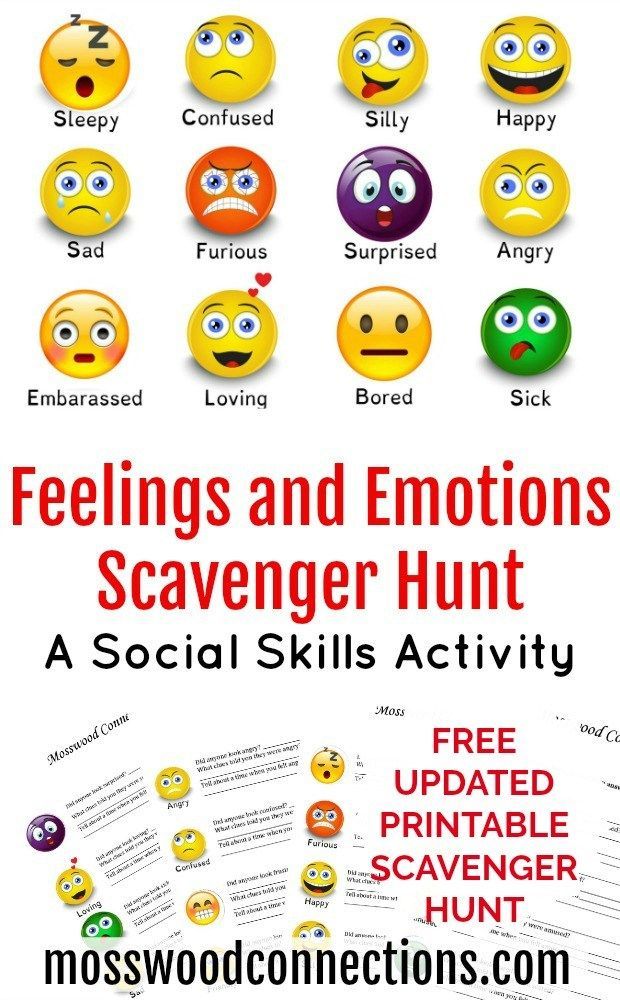

Activities and Games to Help Kids Recognize Big Emotions

Emotions Discovery Bottles | LalyMom

How Am I Feeling Social Skills Game | Mosswood Connections

Toddler Emotions Activity | Schooling a Monkey

Teaching Kids Emotional Intelligence | Mama Smiles

Guess the Emotion Game | School Time Snippets

Exploring Emotions Jenga Game | Childhood 101

Apple Puppets and Story about Feelings | Best Toys for Toddlers


Activities with Books to Help Kids Recognize Emotions

Write a Color Poem: Inspired by My Many Colored Days | School Time Snippets

Simple Lesson About Emotions | Bambini Travel

Quick as a Cricket Empathy Activity | Sugar Aunts

Managing Emotions with Free Printables & Books | Natural Beach Living

However you choose to help kids recognize emotions, you are building the foundation for strong social and emotional development. Just know that the work that you’re doing now will pay off ten-fold when your kids have the emotional intelligence to recognize their own emotions and triggers and start to self-regulate!


I’d love to know! What are some of your favorite ways to teach and learn about emotions?

The Complete Printable Calming Kit For Kids

Want even more resources for calming your child in all the right places?

We are excited to announce our NEW Monthly Calming Printable Kits. This month we have created a Robot Themed Printable Calming Kit that is complete with a visually calming activity pack, a feelings kit, a sensory break kit and bonus coloring pages.

You can get all of these resources for one low bundle price! To make it even more awesome, we have created 2 more bundles that include our new Robot chewable jewelry and our Wacky Tracks fidgets.

Perfect for home, school or on the go! Calm worries, wiggles and sensory overload with this complete Robot Calming Kit, perfect for all ages.

Click here to learn more about the Complete Robot Printable Calming Bundle

Resources for Helping Angry and Anxious Kids Self-Regulate

15 Simple Sensory Activities That Teach Mindfulness

7 Simple Mindfulness Exercises to Calm an Angry Child

5 Simple Breathing Exercises to Calm an Angry Child

A Surprising New Way of Eating that Will Calm Your Angry Child

Two Simple Steps to Help Kids Recognize Emotions

7 Simple Mindfulness Hacks To Calm an Anxious Child

3 Simple Ways to Help Toddlers Get Along


Russian scientists have learned to assess the ability to recognize emotions from brain signals

02 September 2022 16:18 Olga Muraya

Knowing what changes occur in the brain when recognizing emotions, scientists will be able to offer new methods of therapy for a number of mental disorders.

Photo: Unsplash.

Researchers from the HSE and SFU have developed a new technique that will allow people to learn to better recognize emotions in faces.

Scientists from the Higher School of Economics (HSE), together with specialists from the Southern Federal University (SFU), have developed an approach that will help in studying the perception of human emotions by facial expressions.

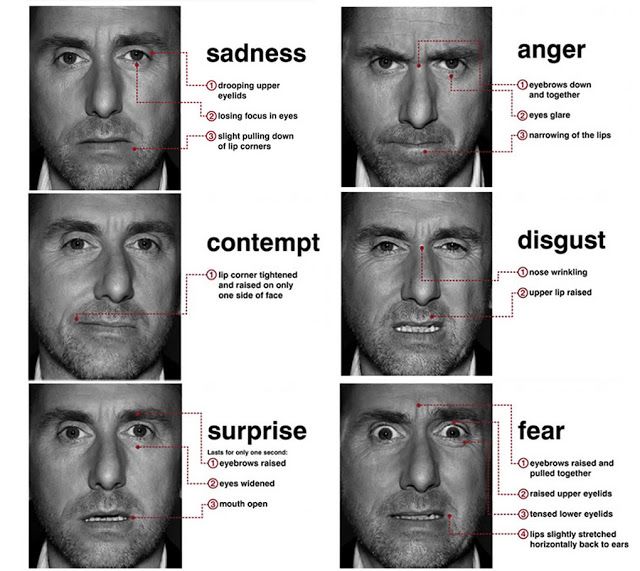

Note first that the new method uses animated images, not photographs, to present emotions. This makes it easier for scientists to classify particular emotions.

During the experiment, recognition of a specific emotion is detected through characteristic bursts of electrical brain activity visible on the electroencephalogram (EEG).

According to the researchers, the new method will help in studying people who have difficulty reading emotions from facial expressions. This difficulty is typical of emotional disorders, including depression and anxiety, as well as of autism, schizophrenia and attention deficit hyperactivity disorder (ADHD).


The ability to read emotions correctly is a skill necessary for survival even in the animal world, to say nothing of human personal, social and professional relationships. People who cannot correctly judge the emotions of others from their appearance are often judged harshly and generally have difficulty interacting with society.

The Russian researchers have therefore developed an approach designed to simplify and speed up the study of emotional disorders. This could eventually help in finding new ways to develop the ability to recognize emotions.

In such experiments, scientists usually use photographs of people displaying a typical emotion. The researchers from HSE and SFU decided to make the experiment more realistic by using animated images.

Using morphing, a technique that creates the visual effect of one object smoothly transforming into another, the scientists produced 48 videos, called morphs.

Each morph consisted of 12 frames in which the basic emotions (anger, sadness, joy, disgust, surprise and fear) smoothly replaced one another. Thanks to computer animation, the videos were identical in style and length.
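The study's exact morphing procedure is not described here. Full face morphing usually combines geometric warping of facial features with a cross-dissolve of pixel values; as a simplified illustration only, the Python sketch below implements just the cross-dissolve step, producing 12 frames that blend linearly from one expression image to another. The tiny 2x2 grayscale "images" are hypothetical stand-ins for real photographs.

```python
def cross_dissolve(start, end, n_frames=12):
    """Return n_frames images blending linearly from `start` to `end`.

    Both images are equally sized 2D lists of grayscale values. Frame 0 equals
    `start` and the last frame equals `end`; no geometric warping is done.
    """
    if n_frames < 2:
        raise ValueError("need at least two frames")
    if len(start) != len(end) or any(len(a) != len(b) for a, b in zip(start, end)):
        raise ValueError("images must have the same shape")
    frames = []
    for k in range(n_frames):
        alpha = k / (n_frames - 1)  # blend weight: 0.0 at the first frame, 1.0 at the last
        frame = [
            [(1 - alpha) * a + alpha * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(start, end)
        ]
        frames.append(frame)
    return frames


# Two tiny 2x2 "images" standing in for two facial expressions.
neutral = [[0.0, 0.0], [0.0, 0.0]]
smile = [[110.0, 110.0], [110.0, 110.0]]
frames = cross_dissolve(neutral, smile)
print(len(frames), frames[0][0][0], frames[-1][0][0])
```

Real morphing software would also move facial landmarks between frames, which is what makes the transition look like one face changing expression rather than two images fading into each other.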

Such uniformity could not be achieved with video recording. And the identity of the videos that are shown to different people is very important for the correct interpretation of the results.

The experiment involved 112 people: 59% men and 41% women.

Participants were shown 144 moving images created from 56 frontal color photographs of four men and four women. They named the emotion they saw aloud into a microphone, another innovation of the study that made it possible to measure realistically how quickly each emotion was recognized.

During this experiment, scientists tracked how volunteers' brain activity changed with correct and incorrect emotion recognition.

As a result, the participants fell into two groups. Those in the first group recognized facial expressions faster and more accurately, while those in the second group were slower and less accurate. In both groups, however, the morphing method proved effective: the scientists found the expected responses in brain areas associated with vision in general (the occipital regions) and with face perception in particular (the temporal regions).


The new technology made it possible to estimate the time of onset of each phase of recognition of facial expressions and the degree of load (use of brain resources) in each of them.

It turned out that the faster the recognition occurred, the greater the load. At the same time, in order to successfully complete the task, people with lower abilities expended more brain resources.

The data obtained helped the scientists build a system that recognizes emotions from EEG data with an accuracy of 76%. The authors hope that in the future this technique will help physicians develop more effective approaches to rehabilitating people with impaired emotion recognition.

A study by Russian scientists was published in Applied Sciences on August 2, 2022.


Emotion recognition in telephone conversation recordings

Speech emotion recognition can be applied to a wide range of tasks. For example, it can automate quality monitoring of customer service in call centers.

The market for detecting a person's emotions from speech is already fairly saturated. I reviewed several solutions from Russian and international companies. Let's look at their advantages and disadvantages.


1) Empath

The Japanese startup Empath was founded in 2017. It created the Web Empath platform, based on algorithms trained on tens of thousands of voice samples from the Japanese medical technology company Smartmedical. The platform's disadvantage is that it analyzes only the voice and does not attempt to recognize speech.

The emotions a person conveys through the text and voice channels often do not match, so sentiment analysis of only one channel is insufficient. Business conversations in particular are characterized by emotional restraint: as a rule, both positive and negative phrases are spoken in a completely flat voice. The opposite also happens, when the words carry no emotional coloring but the voice clearly reveals the speaker's mood.
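A common way to use both channels is late fusion: score each channel separately, then combine the scores. The Python sketch below is a hypothetical illustration, not Empath's or any other vendor's method; the valence scale of [-1, 1], the equal channel weights and the disagreement threshold of 1.0 are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class FusedScore:
    valence: float            # weighted mix of the two channels, in [-1, 1]
    channels_disagree: bool   # True when text and voice point in clearly different directions


def fuse_valence(text_valence, voice_valence, voice_weight=0.5, disagreement=1.0):
    """Late fusion of per-channel valence scores, each assumed to lie in [-1, 1]."""
    for value in (text_valence, voice_valence):
        if not -1.0 <= value <= 1.0:
            raise ValueError("valence scores must lie in [-1, 1]")
    if not 0.0 <= voice_weight <= 1.0:
        raise ValueError("voice_weight must lie in [0, 1]")
    combined = (1 - voice_weight) * text_valence + voice_weight * voice_valence
    gap = abs(text_valence - voice_valence)
    return FusedScore(valence=combined, channels_disagree=gap >= disagreement)


# Negative words spoken in a flat voice: the text channel carries the emotion.
print(fuse_valence(text_valence=-0.75, voice_valence=0.0))
# Neutral words spoken in a tense voice: the voice channel carries the emotion.
print(fuse_valence(text_valence=0.0, voice_valence=-0.75))
# Polite words in an angry voice: the channels conflict and the call is flagged.
print(fuse_valence(text_valence=0.5, voice_valence=-0.75))
```

In the first two cases a single-channel system would miss the negative emotion entirely, while the fused score catches it either way.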

Cultural and linguistic features also strongly influence how an emotional state is expressed, and attempts at multilingual emotion classification show a significant drop in recognition performance [1]. Nevertheless, such a multilingual solution exists, and it allows the company to offer its product to customers around the world.


2) Speech Technology Center

The STC company's Smart Logger II software product includes the QM Analyzer speech analytics module, which automatically tracks events on the telephone line and the speakers' speech activity, recognizes speech and analyzes emotions. To assess the emotional state, QM Analyzer measures physical characteristics of the speech signal (amplitude, frequency and timing parameters) and searches for keywords and expressions that characterize the speaker's attitude toward the topic [2]. During the first few seconds of a conversation, the system accumulates data to estimate the normal tone of the conversation and then, using that as a reference, records changes in tone in a positive or negative direction [3].

The drawback of this approach is that the normal tone is estimated incorrectly when speech is already emotionally colored, positively or negatively, at the start of the recording. In that case, the estimates for the entire recording will be wrong.
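The baseline idea, and its weakness, can be illustrated with a toy Python sketch. It is not the QM Analyzer algorithm: the per-second "tone" values (for example, mean pitch in Hz), the three-second calibration window and the 10% tolerance are assumptions made for illustration.

```python
from statistics import mean


def tone_shifts(tone_per_second, calibration_seconds=3, tolerance=0.10):
    """Label each second after calibration as 'up', 'down' or 'steady'.

    The "normal" tone is the mean of the first `calibration_seconds` values;
    every later value is compared with it using a relative tolerance.
    """
    if len(tone_per_second) <= calibration_seconds:
        raise ValueError("recording is shorter than the calibration window")
    baseline = mean(tone_per_second[:calibration_seconds])
    if baseline <= 0:
        raise ValueError("baseline tone must be positive")
    labels = []
    for value in tone_per_second[calibration_seconds:]:
        change = (value - baseline) / baseline  # relative deviation from the baseline
        if change > tolerance:
            labels.append("up")
        elif change < -tolerance:
            labels.append("down")
        else:
            labels.append("steady")
    return labels


# Calm start, then the speaker becomes excited: the rise is detected.
print(tone_shifts([200, 200, 200, 200, 240, 250]))  # ['steady', 'up', 'up']
# Excited start: the excited tone becomes the baseline, so the later return
# to a calm 200 is reported as a negative shift.
print(tone_shifts([250, 250, 250, 250, 200, 200]))  # ['steady', 'down', 'down']
```

The second call shows the failure mode described above: every label is computed relative to a baseline that was already emotional.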

3) Neurodata Lab

Neurodata Lab develops solutions covering a wide range of areas in emotion research and recognition in audio and video, including voice separation, layer-by-layer analysis and voice identification in an audio stream, comprehensive tracking of body and hand movements, and real-time detection and recognition of key points and facial muscle movements in a video stream. As one of its first projects, the Neurodata Lab team collected RAMAS, a Russian-language multimodal database of experienced emotions that includes parallel recordings of 12 channels (audio, video, oculography, wearable motion sensors and others) for each interpersonal interaction situation. The database was created with actors who re-enacted various everyday communication situations [4].


Using RAMAS and neural network technology, Neurodata Lab built a contact-center solution that recognizes emotions in customers' voices and calculates a service satisfaction index during the conversation with an agent. The analysis is carried out both at the voice level and at the semantic level, after speech is converted to text. The system also takes additional parameters into account: the number of pauses in the operator's speech, changes in voice volume, and total talk time.
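Parameters like these are simple to compute once the audio has been split into short frames. The Python sketch below is a hypothetical illustration, not Neurodata Lab's implementation: it assumes per-frame RMS energy values for the operator's channel, a 10 ms frame step, a silence threshold of 0.01 and a minimum pause length of 300 ms, and it defines "volume change" as the mean energy of the last third of the call minus that of the first third.

```python
from dataclasses import dataclass


@dataclass
class CallStats:
    pause_count: int      # silent stretches of at least min_pause_ms between speech
    volume_change: float  # mean energy of the last third minus that of the first third
    talk_time_s: float    # total duration of non-silent frames, in seconds


def call_stats(frame_energy, frame_ms=10, silence=0.01, min_pause_ms=300):
    """Compute simple conversation statistics from per-frame RMS energy values."""
    if len(frame_energy) < 3:
        raise ValueError("need at least three frames")
    min_pause_frames = min_pause_ms // frame_ms
    pauses = 0
    silent_run = 0
    seen_speech = False
    for energy in frame_energy:
        if energy < silence:
            silent_run += 1
            continue
        # A speech frame ends the current silent run; count the run as a pause
        # only if it is long enough and speech also occurred before it.
        if seen_speech and silent_run >= min_pause_frames:
            pauses += 1
        seen_speech = True
        silent_run = 0
    third = len(frame_energy) // 3
    first, last = frame_energy[:third], frame_energy[-third:]
    volume_change = sum(last) / len(last) - sum(first) / len(first)
    talk_frames = sum(1 for energy in frame_energy if energy >= silence)
    return CallStats(pauses, volume_change, talk_frames * frame_ms / 1000)


# 0.5 s of speech, a 0.4 s pause, 0.5 s of louder speech, a short 0.1 s gap,
# then 0.3 s of even louder speech (10 ms frames).
frames = [0.1] * 50 + [0.0] * 40 + [0.2] * 50 + [0.0] * 10 + [0.3] * 30
print(call_stats(frames))
```

Silence before the first word and after the last one is deliberately not counted as a pause, since it usually reflects call setup and hang-up rather than hesitation.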

Note, however, that the database used to train the neural network in this solution was recorded specifically with actors. According to research, moving from acted emotion databases to recognizing emotions in spontaneous speech leads to a noticeable drop in algorithm performance [1].

As you can see, each solution has its pros and cons. Let's try to combine the best features of these products and implement our own service for analyzing phone calls.

I chose the five most frequently used features:

- Mel-frequency cepstral coefficients (MFCC)

- Chroma vector

- Mel spectrogram

- Spectral contrast

- Tonal centroid features (tonnetz)
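The article does not say how frame-level features were turned into classifier inputs. A common approach, assumed here, is to average each feature over time and concatenate the averages into one fixed-length vector per segment. The row counts below (40 MFCCs, 12 chroma bins, 128 mel bands, 7 spectral-contrast rows, 6 tonnetz dimensions) match settings commonly used with the librosa library but are assumptions, and random matrices stand in for real extractor output.

```python
import numpy as np

# Assumed number of rows each feature extractor returns per frame.
FEATURE_ROWS = {
    "mfcc": 40,
    "chroma": 12,
    "mel": 128,
    "contrast": 7,
    "tonnetz": 6,
}


def pool_features(features):
    """Average each (rows, frames) matrix over time and concatenate the means.

    `features` maps a feature name to a 2D array whose columns are frames.
    Iterating over FEATURE_ROWS keeps the vector layout identical for every segment.
    """
    parts = []
    for name, rows in FEATURE_ROWS.items():
        matrix = np.asarray(features[name], dtype=float)
        if matrix.ndim != 2 or matrix.shape[0] != rows:
            raise ValueError(f"{name}: expected {rows} rows, got shape {matrix.shape}")
        parts.append(matrix.mean(axis=1))  # one mean value per row
    return np.concatenate(parts)


# Random matrices stand in for the output of a real feature extractor.
rng = np.random.default_rng(0)
n_frames = 87
segment = {name: rng.normal(size=(rows, n_frames)) for name, rows in FEATURE_ROWS.items()}
vector = pool_features(segment)
print(vector.shape)  # (193,)
```

Averaging discards the temporal order of frames, which is one reason a convolutional network over the full frame sequence can outperform classical classifiers on the pooled vector.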

From segments selected from recordings of telephone conversations, I compiled three variants of the data set, each with a different number of emotion classes. To compare the training results, I also used the Berlin Database of Emotional Speech (Emo-DB), which was recorded with professional actors.

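| Data set | Emotion classes | Records | Classifier | Accuracy |
|---|---|---|---|---|
| MCartEmo-admntlf | 7 | 324 | KNeighborsClassifier | 49.231% |
| MCartEmo-asnef | 5 | 373 | GradientBoostingClassifier | 49.333% |
| MCartEmo-pnn | 3 | | BaggingClassifier | 55.294% |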

As the number of emotion classes increased, recognition accuracy decreased. This may also be due to the smaller sample size, since labeling data for a large number of classes is harder.
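Raw accuracies are hard to compare across data sets with different numbers of classes, because random guessing already scores 1/k on k balanced classes. The short Python calculation below compares each reported accuracy with that chance level. It assumes balanced classes, which the text does not state, and the data set name and three-class count attached to the 55.294% result are inferred from the text rather than given explicitly.

```python
def times_chance(accuracy_percent, n_classes):
    """How many times higher an accuracy is than random guessing on k balanced classes."""
    chance_percent = 100 / n_classes
    return accuracy_percent / chance_percent


# (data set, number of classes, reported accuracy in %)
RESULTS = [
    ("MCartEmo-admntlf", 7, 49.231),
    ("MCartEmo-asnef", 5, 49.333),
    ("MCartEmo-pnn", 3, 55.294),  # assumed: three classes, inferred from the name
]

for name, k, accuracy in RESULTS:
    print(f"{name}: {accuracy:.1f}% vs. chance {100 / k:.1f}% "
          f"({times_chance(accuracy, k):.2f}x chance)")
```

Under these assumptions the seven-class result is roughly 3.4 times chance and the three-class result about 1.7 times chance, so a lower raw accuracy on more classes does not by itself mean a weaker model.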

Next, I tried to train a convolutional neural network on the MCartEmo-pnn dataset. The optimal architecture turned out to be the following.

The recognition accuracy of such a network was 62.352%.

Next, I expanded and filtered the data set, increasing the number of records to 566, and retrained the model on the new data. Recognition accuracy rose to 66.666%. This suggests that expanding the data set further should continue to improve the accuracy of voice-based emotion recognition.
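Whether the improvement from 62.352% to 66.666% is meaningful depends on how many recordings were held out for testing, which the text does not state. As a rough, hypothetical check, the Python sketch below computes a 95% Wilson score interval for an accuracy measured on n test recordings; the test-set size used in the example (113, about 20% of 566) is an assumption.

```python
from math import sqrt


def wilson_interval(accuracy, n, z=1.96):
    """Wilson score interval for a proportion measured on n trials (z = 1.96 for 95%)."""
    if n <= 0 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need n > 0 and accuracy in [0, 1]")
    denom = 1 + z * z / n
    centre = (accuracy + z * z / (2 * n)) / denom
    half_width = z * sqrt(accuracy * (1 - accuracy) / n + z * z / (4 * n * n)) / denom
    return centre - half_width, centre + half_width


n_test = 113  # hypothetical: roughly 20% of the 566 records
for acc in (0.62352, 0.66666):
    low, high = wilson_interval(acc, n_test)
    print(f"{acc:.1%}: [{low:.1%}, {high:.1%}]")
```

With about a hundred test recordings the two intervals overlap heavily (roughly 53% to 71% and 58% to 75%), so a larger data set would also make the comparison itself more reliable.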


For the service design, I chose a microservice architecture: several narrowly focused, mutually independent services, each of which solves only one task. Any of these microservices can be separated from the system and, with some additional logic, used as a standalone product.

The Gateway API service authenticates users according to the JSON Web Token (JWT) standard and acts as a proxy server, forwarding requests to the functional microservices, which run in an isolated internal network.
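The gateway's two duties, checking a JSON Web Token and choosing the internal service to forward a request to, can be sketched with the Python standard library alone. This is an illustration under assumptions, not the author's code: it supports only HS256-signed tokens (HMAC-SHA256, as defined for JWTs in RFC 7519 and RFC 7518), and the route prefixes and internal service addresses are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical mapping from public path prefixes to internal microservices.
ROUTES = {
    "/api/transcribe": "http://transcriber:8000",
    "/api/emotions": "http://emotion-analyzer:8000",
}


def _b64url_decode(part):
    # JWT segments use unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def _b64url_encode(data):
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def verify_hs256(token, secret):
    """Return the token's claims if its HS256 signature and expiry are valid."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:  # wrong segment count, bad base64 or bad JSON
        raise PermissionError("malformed token") from exc
    if header.get("alg") != "HS256":
        raise PermissionError("unsupported algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode()
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("bad signature")
    if "exp" in claims and claims["exp"] < time.time():
        raise PermissionError("token expired")
    return claims


def route(path, authorization, secret):
    """Authenticate the request and return (upstream URL, user id) to proxy it to."""
    if not authorization.startswith("Bearer "):
        raise PermissionError("missing bearer token")
    claims = verify_hs256(authorization[len("Bearer "):], secret)
    for prefix, upstream in ROUTES.items():
        if path.startswith(prefix):
            return upstream + path, claims.get("sub")
    raise LookupError(f"no service for {path}")


def make_token(claims, secret):
    """Create an HS256 token (used here only to demonstrate the gateway)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


secret = b"demo-secret"
token = make_token({"sub": "user-42", "exp": time.time() + 60}, secret)
print(route("/api/emotions/analyze", "Bearer " + token, secret))
```

In a production gateway a maintained JWT library and an HTTP proxy would replace this hand-written code; the sketch only shows the order of the checks: parse, verify the signature, check expiry, then route.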

The developed service was integrated with Bitrix24 through a Speech Analytics application created for this purpose. In Bitrix24 terms, this is a server application (an application of the second type). Such applications can access the Bitrix24 REST API via the OAuth 2.0 protocol and register their own event handlers. It was therefore enough to add routes for installing the application (effectively, registering a user), uninstalling it (deleting the user account), and handling the OnVoximplantCallEnd event, which saves the analysis results to the cards of the CRM entities associated with the call. As a result, the application attaches a transcript of the recording to the call and adds a comment that rates the success of the conversation on a five-point scale, with a graph of changes in each participant's emotional state attached.
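The text does not describe how the five-point success score or the emotion graph are derived from the analysis. One plausible scheme, shown below purely as a hypothetical Python sketch, maps each diarized segment's predicted label (assumed here to be "positive", "neutral" or "negative") to a valence of +1, 0 or -1, groups the segments into per-speaker timelines that could be plotted as the graph, and rescales the mean valence of all segments from [-1, 1] to a 1 to 5 score.

```python
import math
from collections import defaultdict

# Assumed label set and valence values; the article does not specify them.
VALENCE = {"negative": -1.0, "neutral": 0.0, "positive": 1.0}


def emotion_timelines(segments):
    """Group (speaker, start_seconds, label) segments into per-speaker timelines."""
    timelines = defaultdict(list)
    for speaker, start, label in sorted(segments, key=lambda seg: seg[1]):
        timelines[speaker].append((start, VALENCE[label]))
    return dict(timelines)


def success_score(segments):
    """Rescale the mean valence of all segments from [-1, 1] to a 1-5 score."""
    if not segments:
        raise ValueError("no analysed segments")
    mean_valence = sum(VALENCE[label] for _, _, label in segments) / len(segments)
    raw = 1 + (mean_valence + 1) * 2  # -1 -> 1, 0 -> 3, +1 -> 5
    return math.floor(raw + 0.5)      # round half up (Python's round() rounds half to even)


call = [
    ("operator", 0.0, "neutral"),
    ("customer", 4.5, "negative"),
    ("operator", 9.0, "neutral"),
    ("customer", 15.0, "neutral"),
    ("customer", 21.0, "positive"),
]
print(emotion_timelines(call))
print(success_score(call))  # 3
```

A real scoring rule might weight the customer's segments more heavily or give extra weight to how the call ends; those choices are left open here.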


Conclusion

This paper presents the results of a study on emotion recognition in speech. Based on Russian-language recordings of telephone conversations, a data set of emotional speech was created and used to train a CNN, which achieved a recognition accuracy of 66.666%.

A web service was implemented that removes noise from audio recordings, performs diarization and transcription, and analyzes emotions in audio recordings or text messages.

The service has been improved so that it can also be used as a Bitrix24 application.

This work was commissioned by MCC Art as part of the bachelor's degree program "Neurotechnologies and Programming" at ITMO University. I also presented a talk on this topic at the X Congress of Young Scientists (CMU), and an article was accepted for publication in the Congress proceedings.

In the near future, I plan to improve the accuracy of voice-based emotion recognition by expanding the training data set for the neural network, and to replace the diarization tool, which did not perform well enough in practice.

List of sources

1. Davydov, A., Kiselev, V., Kochetkov, D. Classification of the speaker's emotional state by voice: problems and solutions. Proceedings of the International Conference "Dialogue 2011", 2011, pp. 178-185.

2. Smart Logger II. The evolution of multichannel recording systems: from call registration to speech analytics [Electronic resource].
