Assessing guided reading

Guided Reading Assessments: 2 Simple Ways to Track How Students Are Doing

Guided reading time goes by SO quickly. If you’re like me, you want to make the most of that time – but also monitor how students are doing!

Guided reading assessments can be difficult to implement…but they don’t have to be!

In this blog post, I’ll share two simple ways to track how students are doing during guided reading.

These assessments will give you plenty of useful data to guide your instruction!

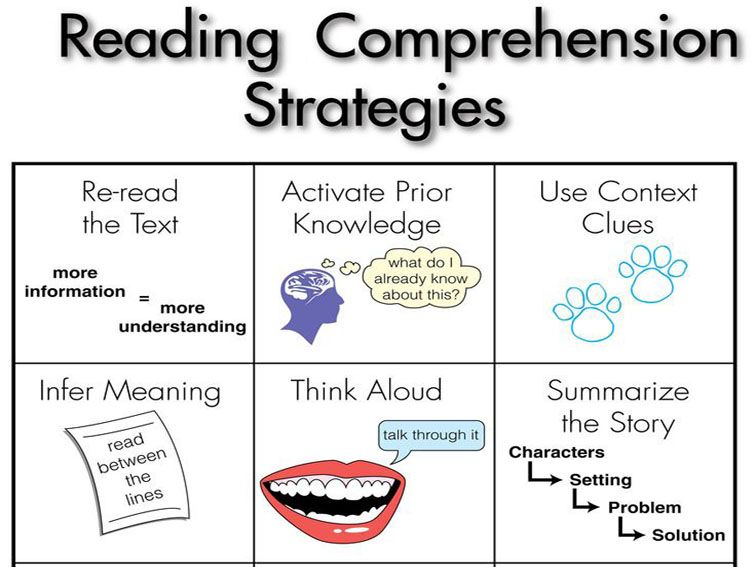

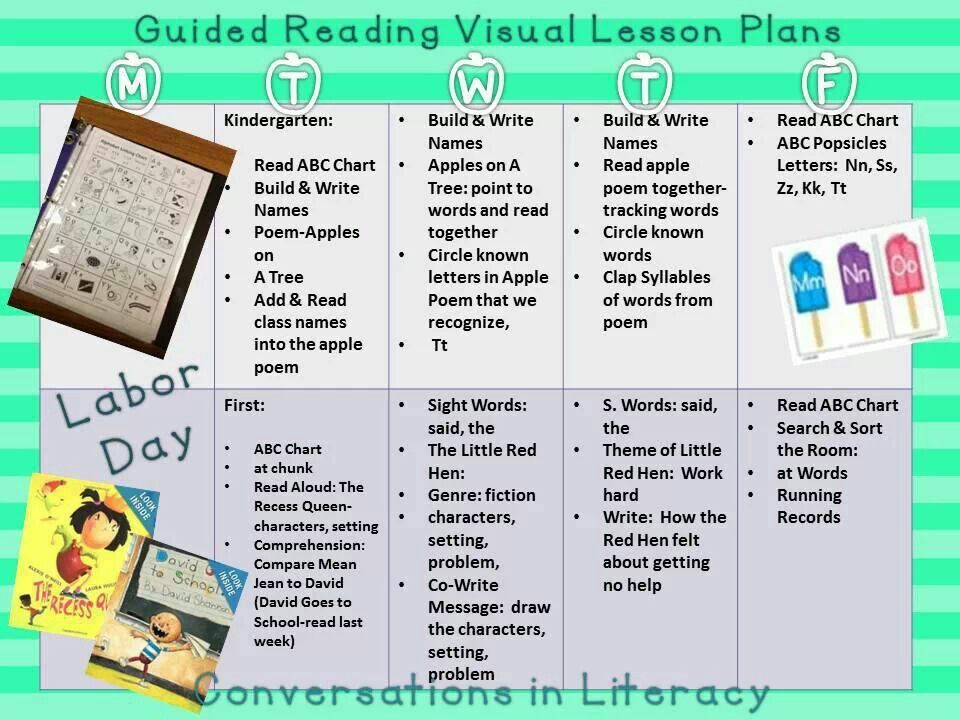

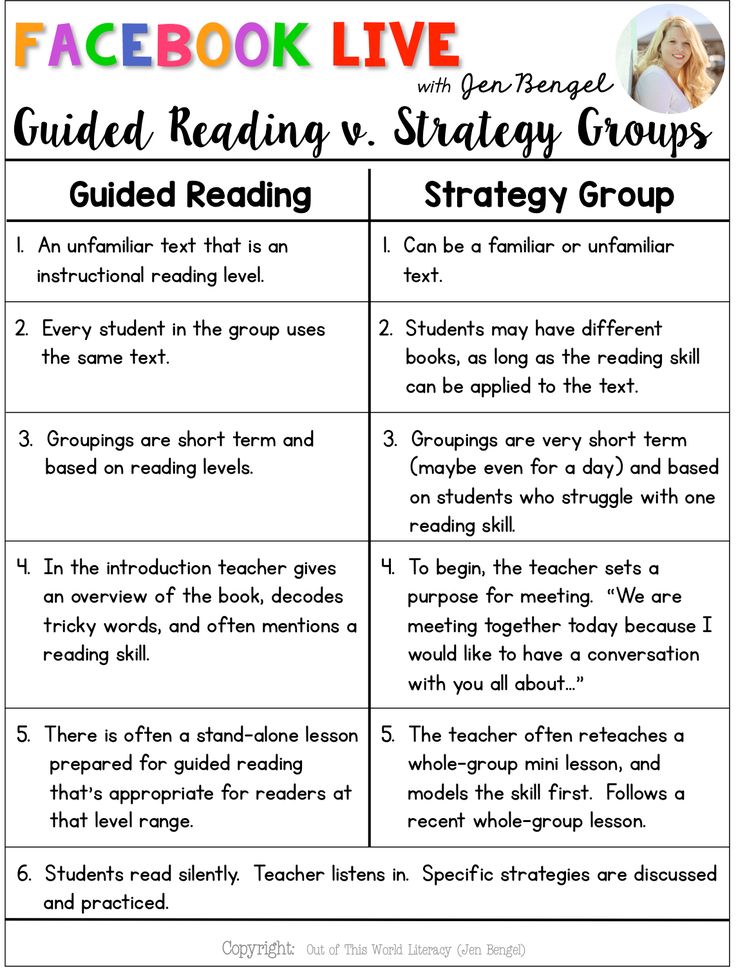

Method #1: Running Records

At the beginning of each guided reading group, I take a running record of one student’s reading. (If you’re not sure how to take a running record, please read this post.)

Meanwhile, the other students quietly re-read familiar texts. This is awesome for fluency!!

I have one student sit next to me while the others are reading. I hand them the book that we read during the previous guided reading lesson.

I have the student read all of the book to me (for lower level books only) or some of the book (about 150 words).

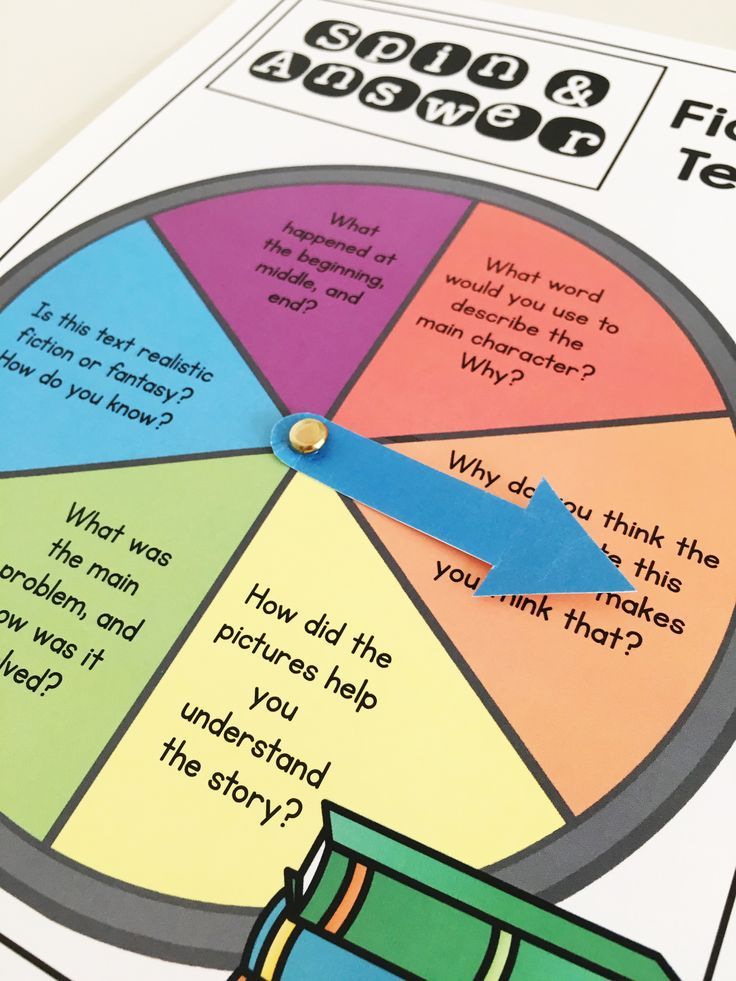

I then ask the child to retell. I try to ask one inferential (higher level) comprehension question, too.

I record the child’s fluency on a scale from 1-3 (1 being disfluent, 3 being fluent).

And that’s it! This whole process only takes about 5 minutes.

I stick the running record form in my binder, and get started with the rest of the students.

Later, on Thursdays, when I plan for the following week of guided reading, I review the running records.

If I haven’t had a chance to calculate accuracy and self-correction rates, I do it then.
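If you'd rather not do that math by hand, here is a minimal Python sketch of the two calculations. It assumes the commonly used running record formulas: accuracy is the share of words read correctly, and the self-correction ratio is (errors + self-corrections) divided by self-corrections, with self-corrections not counted as errors. The function names and sample numbers are made up for illustration; they don't come from my forms.

```python
from typing import Optional


def accuracy_rate(total_words: int, errors: int) -> float:
    """Percentage of words read correctly."""
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    return (total_words - errors) / total_words * 100


def self_correction_ratio(errors: int, self_corrections: int) -> Optional[float]:
    """Return N for a ratio of 1:N, or None if there were no self-corrections."""
    if self_corrections == 0:
        return None
    return (errors + self_corrections) / self_corrections


# Hypothetical record: 150 words read, 6 errors, 3 self-corrections.
print(f"Accuracy: {accuracy_rate(150, 6):.1f}%")
ratio = self_correction_ratio(6, 3)
if ratio is not None:
    print(f"Self-correction ratio: 1:{ratio:.0f}")
```

For that sample record, the accuracy works out to 96% and the self-correction ratio to 1:3.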

I may not have a running record for every single student from that week, but I’ll have a couple of running records from each group. (I rotate through students, so I get a running record for each student about every 2-3 weeks.)

From my running records, I can make decisions about:

- What books to choose for upcoming lessons

- Phonics patterns that students need to work on

- Which strategies to focus on

- Whether or not any students need to change reading groups (read this blog post to help you figure out when to move students up a guided reading level)

Method #2: Guided Reading Checklists

In addition to taking that running record toward the beginning of my guided reading lesson, I like to take notes and make observations during the rest of the group time.

However…I’ve often found that REALLY HARD to do! Even with the best intentions, I don’t end up taking many notes. I’m busy supporting students in the group!

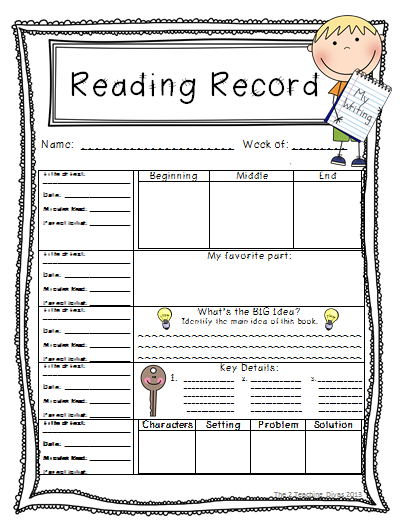

So I ended up creating my guided reading checklists.

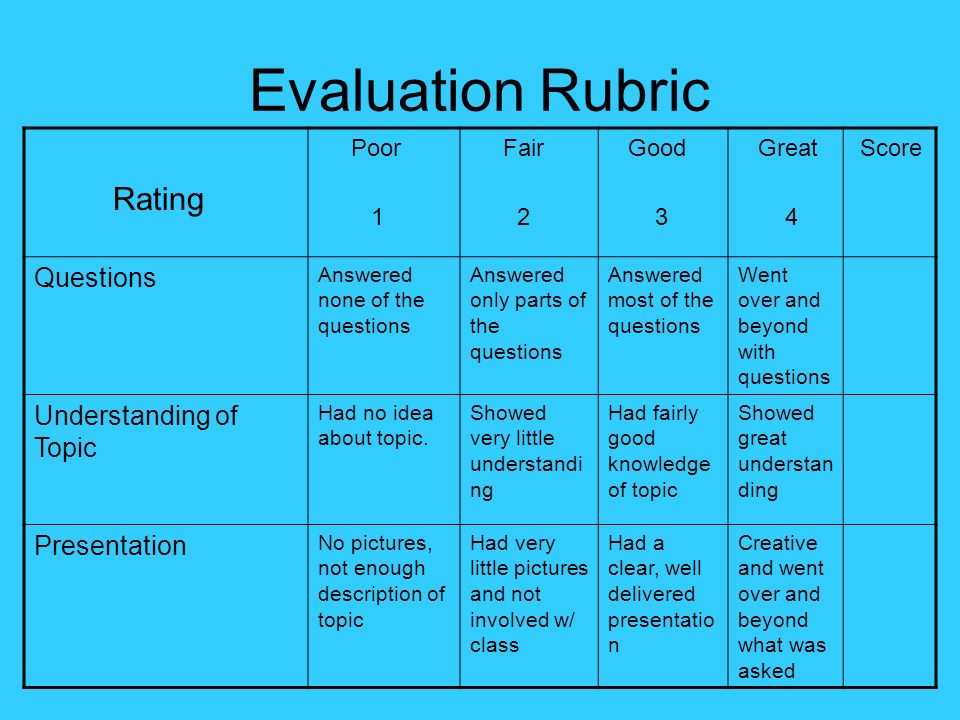

These checklists list the skills that students are focusing on at each individual level. Rather than having to write out complete sentences, I simply make checkmarks or ratings.

I usually work on 1-2 checklists per group each time we meet. In other words, I’m not trying to fill out a complete checklist for all 5 students! Sometimes I don’t even complete a full checklist for one student.

Just like the running records, these checklists can help you make instructional planning decisions and adjustments to your groups.

Download Assessment Forms

If you’d like to use the same guided reading checklists that I do, you can grab my Kindergarten, first grade, or second grade set here:

And if you’d like to download a free running record form (and other guided reading goodies), click HERE.

Happy teaching!

Next Step Guided Reading Assessment


Data Management

Assess, Decide, Guide

Assess

Pinpoint your teaching focus, select texts, and plan and teach powerful lessons in four easy steps.

Decide

Use data to determine students’ reading levels, form instructional groups, and create effective action plans for student learning goals.

Guide

Access professional development tools, resources, and videos online to plan personalized instruction with lessons to match reading stages.


Get to know your readers in four easy steps

1. Reading Interest Survey (whole class)

Uncover students’ reading interests to match readers to just-right texts.

2. Word Knowledge Inventory (whole class)

Determine skills in phonological awareness and phonics to inform your word study instruction.

3. Comprehension Assessment (whole class)

Evaluate students' higher-level thinking skills and identify students who need immediate support. (For Grades K–2, this is administered as a read-aloud assessment.)

4. Reading Assessment Conference (one on one)

Obtain precise data on phonics, word recognition, fluency, and comprehension to determine instructional levels and identify skills and strategies to target during guided reading lessons.

Meet the Authors

Jan Richardson, Ph.D., is an educational consultant who has trained thousands of teachers and works with schools and districts to ensure that every student succeeds in reading. Her work is informed by her experience as a reading specialist, a Reading Recovery teacher leader, a staff developer, and a teacher of every grade from kindergarten through high school.

Maria Walther, Ed.D., is an author and expert in literacy with more than three decades of experience as a first-grade teacher in the Chicago area. She was honored as Illinois Reading Educator of the Year and earned the ICARE for Reading Award for fostering a love of reading in children.


Testimonials

Targets Instruction Efficiently

An all-in-one product that simplifies and clarifies the complex process of assessing and teaching reading. Gives teachers a full picture of a reader so they can target instruction more efficiently and effectively.

Rosanne L. Kurstedt, Ph.D.

Educational Consultant and Staff Developer

Westfield, NJ

Simple Yet Effective

The Assess-Decide-Guide framework is simple yet effective, and will undoubtedly increase the effectiveness of core instruction.

Terry J. Dade

Assistant Superintendent

Fairfax County Public Schools, VA

Clear and Pragmatic

The results have been amazing. Guided reading instruction is no longer a puzzle. Teachers are thrilled with the clarity and pragmatic shifts in their practice.


Ellen Lewis

Reading Specialist

Fairfax County Public Schools, VA

Valuable Teaching Points

Valuable teaching points can be derived from the simple checklists for decoding and retelling, as well as from the quality comprehension questions. The assessment texts are appropriately leveled and of high interest to kids, making this assessment a breeze to administer!

Melanie E. Smith

Reading Specialist, NBCT

South Salem Elementary School, Salem, VA

Guided Reading Constructor - Didactor

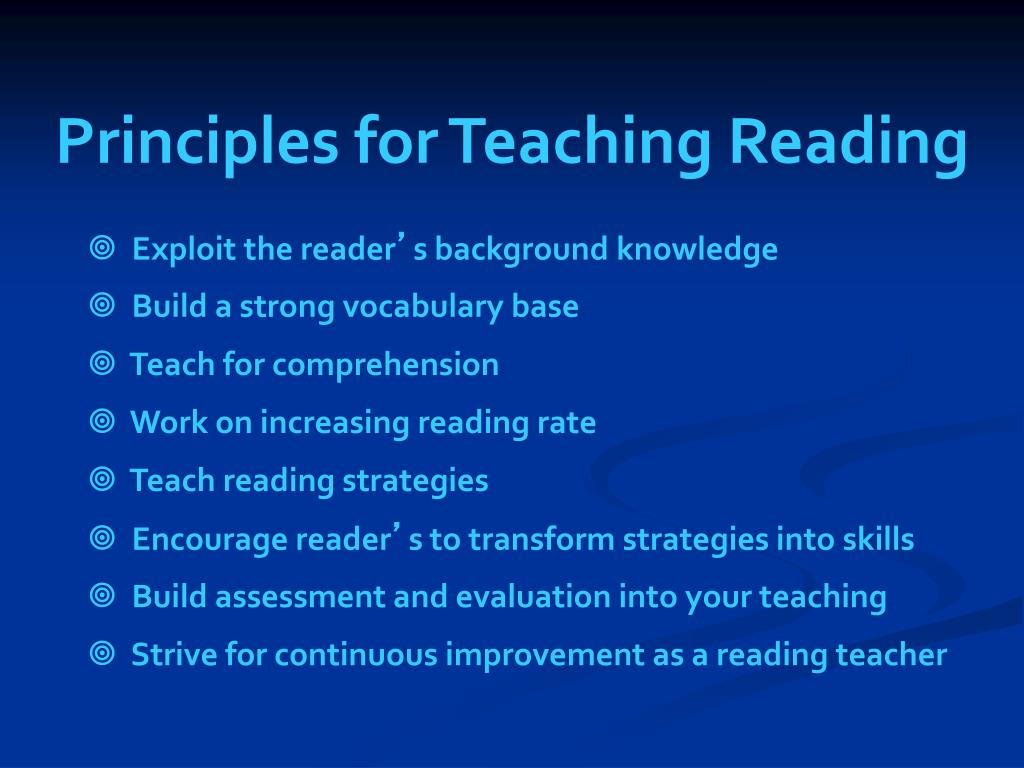

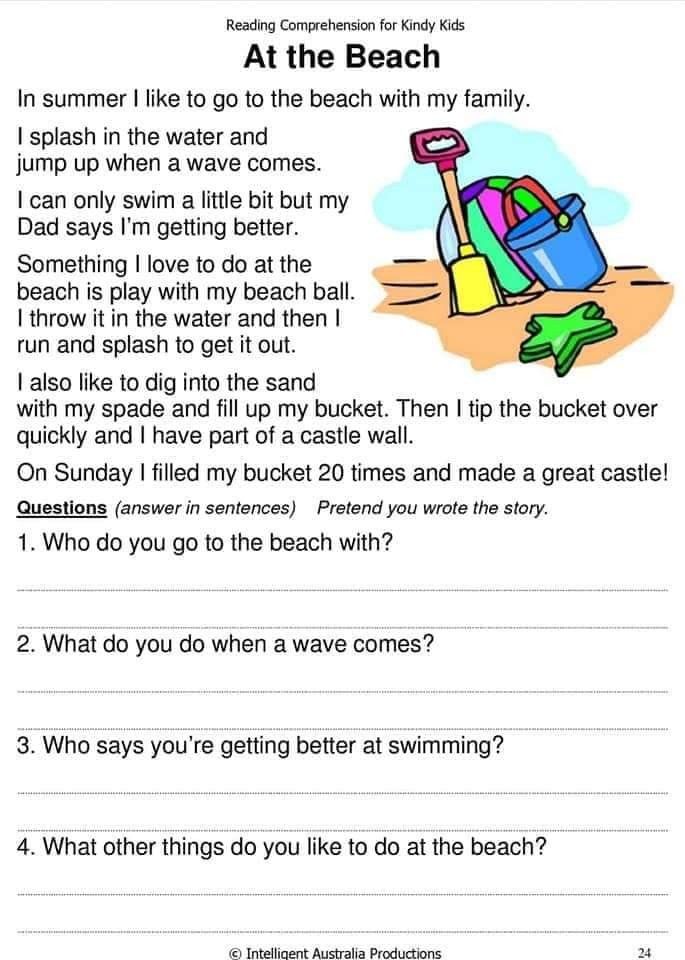

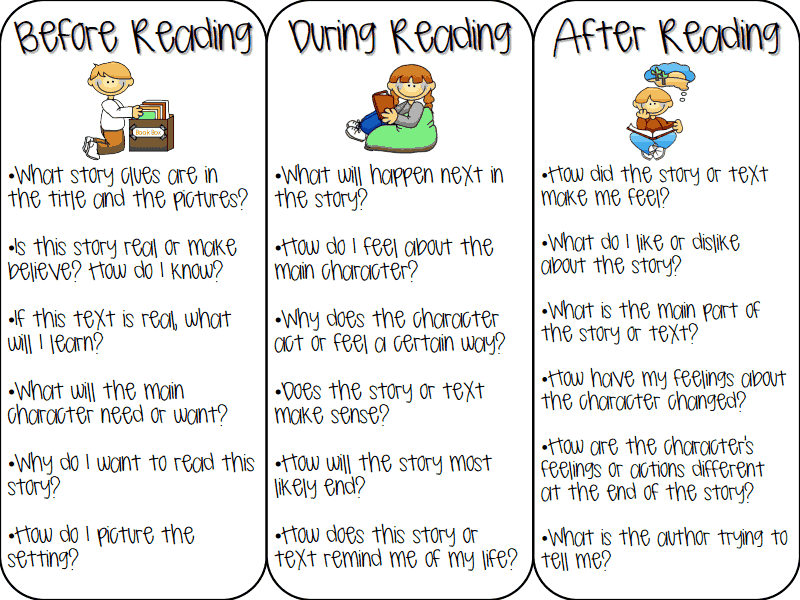

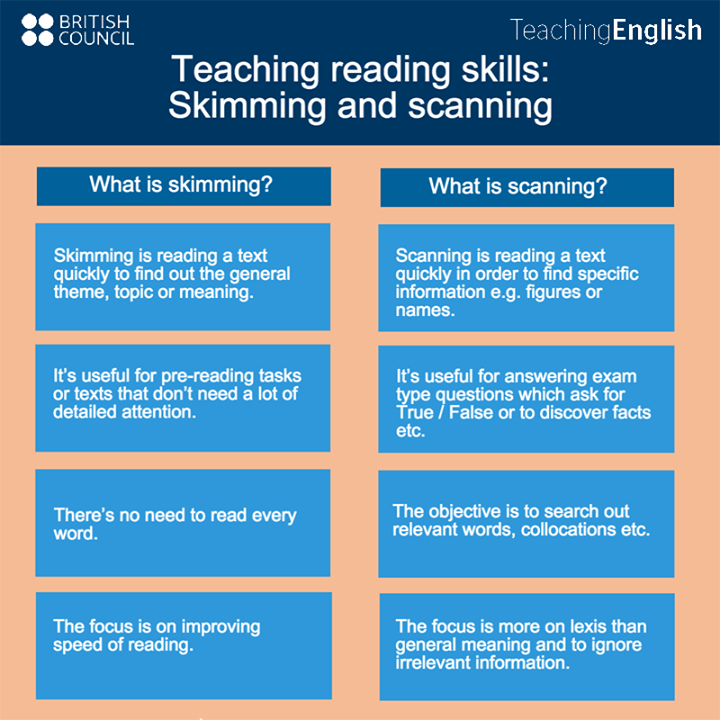

Quite often in elementary school, and even in grades 5-6, students need to be taught to read carefully. It helps when, after a paragraph has been read, the teacher asks a question about it, or a question that also reviews previously covered material.

Of course, such guided reading is necessary when the text presents certain difficulties for the student. A pause and a question are then needed so that the student can comprehend what has been read.

This may be the case in foreign language lessons, where the student does not always know every word, or in any other subject where unfamiliar words and terms appear and you need to check how the student understands them.

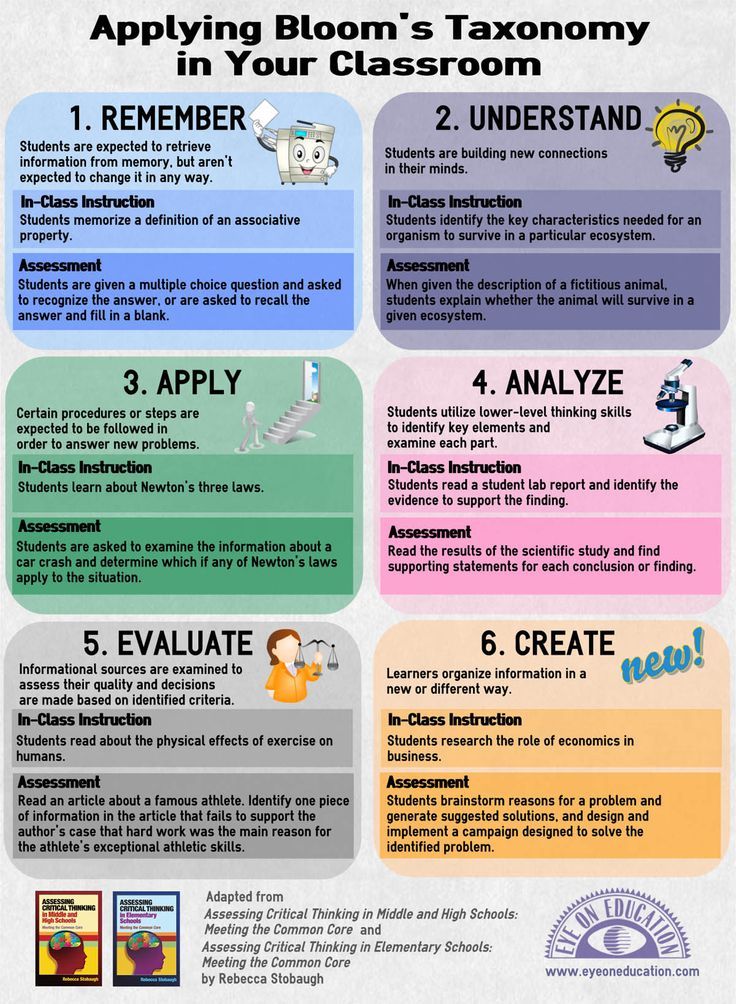

Guided reading keeps the student's attention on the text. With this technique, you can lay the foundations for critical comprehension of what has been read, so it can become an integral part of critical thinking instruction.

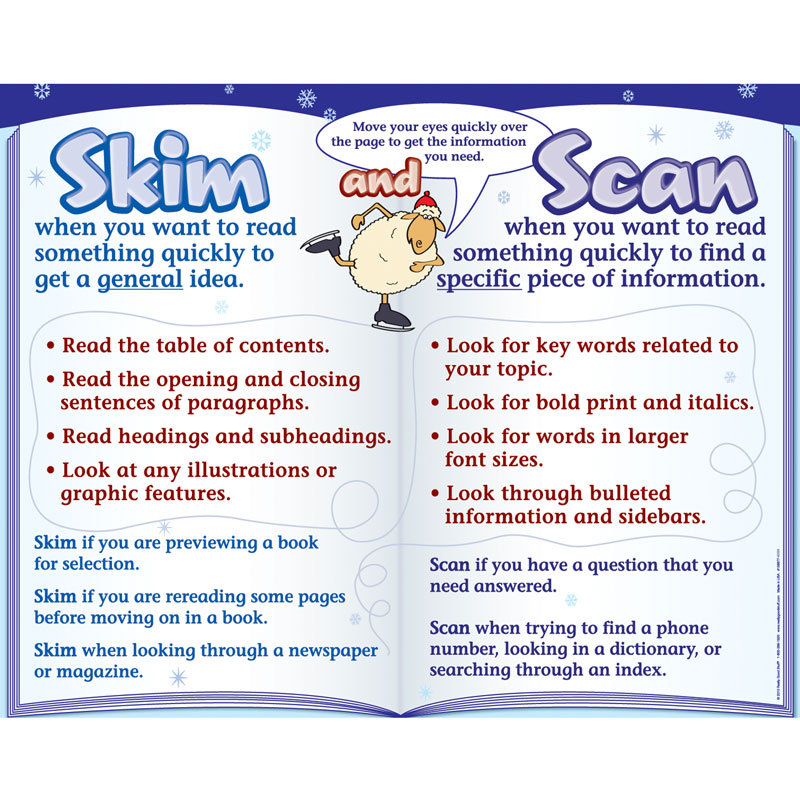

I am offering a Guided Reading Constructor made in PowerPoint. It is a text in which, after a certain sentence or paragraph, students must answer a question posed by the teacher. The task is presented as a multiple-choice test. After answering correctly, the student can continue reading. See how it works.
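For readers who think in code, the reading flow can be sketched in a few lines of Python. This is only an illustration of the idea, not how the PowerPoint file is built: the `Checkpoint` class, the `guided_read` function, the `max_attempts` limit, and the sample text are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Checkpoint:
    passage: str        # sentence or paragraph to read
    question: str       # teacher's question about the passage
    options: List[str]  # multiple-choice answers
    correct: int        # index of the correct option


def guided_read(checkpoints: List[Checkpoint],
                choose: Callable[[Checkpoint], int],
                max_attempts: int = 3) -> int:
    """Return how many passages were completed.

    The next passage is reached only after a correct answer.
    """
    completed = 0
    for cp in checkpoints:
        for _ in range(max_attempts):
            if choose(cp) == cp.correct:
                completed += 1
                break
        else:
            # No correct answer within the attempt limit: reading stops here.
            return completed
    return completed


text = [
    Checkpoint("The fox hid in the den.", "Where did the fox hide?",
               ["In a tree", "In the den", "In the river"], 1),
    Checkpoint("At night it went hunting.", "When did the fox hunt?",
               ["At night", "At noon"], 0),
]

# Simulate a student who always picks the correct option.
print(guided_read(text, lambda cp: cp.correct))
```

Passing the answering behavior in as a function keeps the sketch testable without any keyboard input.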


You can download the constructor itself here. To use it for your own purposes, just change the text and tasks, and move the question buttons if necessary. The first click on a question button acts as a hyperlink. Thanks to it, the student moves on to read the next sentence or paragraph and answer the question posed.

The task appears after pressing the question number again. In this case, the question button is no longer a hyperlink, but a trigger.

Of course, you need to place the correct answer where the green signal appears. Move the rectangle with the correct answer to another place on the next slides.

If you need more questions, copy the last slide, paste it below, and add the corresponding button.

The constructor can be used in other ways as well:

- to improve students' literacy: you can enter text containing an error and ask students to choose the correct option;

- to select synonyms or antonyms for a given word;

- in reading lessons, when the teacher asks students to express their own opinion on what they have read.

I hope teachers find more ways to use the Guided Reading Constructor.

Assessment recommendations for Azure Migrate Discovery and Assessment - Azure Migrate


Azure Migrate combines the tools you use to discover, assess, and migrate applications, infrastructure, and workloads to Microsoft Azure. It includes Azure Migrate tools and ISV offerings.

This article provides guidelines for creating assessments using the Azure Migrate Discovery and Assessment tool.

The assessments generated by Azure Migrate Discovery and Assessment are a point-in-time snapshot of data. There are four types of assessments that you can create with the Azure Migrate Discovery and Assessment tool:

| Assessment type | Details |
|---|---|
| Azure VM | Assessments for migrating on-premises servers to Azure virtual machines. Use this type to assess on-premises VMware servers, Hyper-V servers, and physical servers for migration to Azure. |
| Azure SQL | Assessments for migrating on-premises SQL servers from a VMware environment to Azure SQL Database or Azure SQL Managed Instance. |
| Azure App Service | Assessments for migrating on-premises ASP.NET web applications, running on an IIS web server, from a VMware environment to Azure App Service. |
| Azure VMware Solution (AVS) | Assessments for migrating on-premises servers to Azure VMware Solution (AVS). Use this type to assess on-premises VMware VMs for migration to AVS. |

Note

If the number of Azure VM or AVS assessments shown in the Discovery and assessment tool is incorrect, click the total number of assessments to go to all assessments, and then recalculate the Azure VM or AVS assessments. The tool will then show the correct count for that assessment type.

Sizing criteria

Sizing criteria options in Azure Migrate assessments:

| Sizing criteria | Details | Data |
|---|---|---|
| Performance-based | Assessments that make recommendations based on collected performance data. | Azure VM assessment: the recommended VM size is based on CPU and memory utilization data. The disk type recommendation (Standard HDD, Standard SSD, Premium managed disks, or Ultra disks) is based on the IOPS and throughput of the on-premises disks. Azure SQL assessment: the Azure SQL configuration is based on performance data of SQL instances and databases, including CPU utilization, memory utilization, IOPS (data and log files), throughput, and I/O latency. Azure VMware Solution (AVS) assessment: the recommended AVS node size is based on CPU and memory utilization data. |
| As on-premises | Assessments that do not use performance data to make recommendations. | Azure VM assessment: the recommended VM size is based on the on-premises VM size. The recommended disk type depends on the storage type setting you selected for the assessment. Azure App Service assessment: recommendations are based on the configuration data of the on-premises web applications. Azure VMware Solution (AVS) assessment: the recommended AVS node size is based on the on-premises VM size. |

Example

For example, if you have an on-premises VM with four cores at 20% utilization and 8 GB of memory at 10% utilization, the Azure VM assessment would be:
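The following is a minimal sketch of the arithmetic behind this example, not the tool's actual sizing logic. The 1.3 comfort factor (a buffer multiplier) is an assumption, and `effective_requirement` is a hypothetical helper name. The as-on-premises case simply reuses the on-premises allocation, as described in the sizing criteria table.

```python
def effective_requirement(allocated: float, utilization: float,
                          comfort_factor: float = 1.3) -> float:
    """Scale the allocated resource by its utilization, then add a buffer.

    comfort_factor=1.3 is an assumed value for illustration only.
    """
    if not 0 <= utilization <= 1:
        raise ValueError("utilization must be between 0 and 1")
    return allocated * utilization * comfort_factor


# On-premises VM from the example: 4 cores at 20%, 8 GB of memory at 10%.
cores, cores_util = 4, 0.20
memory_gb, memory_util = 8, 0.10

# Performance-based sizing: look for a size covering the utilized resources.
perf_cores = effective_requirement(cores, cores_util)
perf_memory = effective_requirement(memory_gb, memory_util)
print(f"Performance-based: ~{perf_cores:.2f} cores, ~{perf_memory:.2f} GB")

# As on-premises sizing: utilization is ignored.
print(f"As on-premises: {cores} cores, {memory_gb} GB")
```

Under these assumptions, the performance-based target is about 1.04 cores and 1.04 GB of memory, while the as-on-premises target stays at 4 cores and 8 GB.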

The Azure Migrate appliance continuously profiles your on-premises environment and sends metadata and performance data to Azure. To assess servers discovered by the appliance, follow these guidelines:

- Create as-on-premises assessments. You can create as-on-premises assessments as soon as the servers appear in the Azure Migrate portal. Azure SQL assessments cannot use the "As on-premises" sizing criteria. Azure App Service assessments are "As on-premises" by default.

- Create performance-based assessments. After setting up discovery, we recommend waiting at least one day before running a performance-based assessment:

  - Collecting performance data takes time. Waiting at least one day allows enough performance data points to be collected before you run the assessment.

  - When running performance-based assessments, be sure to profile your environment for the full assessment duration. For example, if you create an assessment with a performance duration of one week, wait at least a week after you start discovery so that all the data points are collected. Otherwise, the assessment won't get a five-star confidence rating.

- Recalculate assessments. Because assessments are point-in-time snapshots, they do not automatically reflect the latest data. To update an assessment with the latest data, recalculate it.

For assessments of servers imported into Azure Migrate using a .csv file, follow these guidelines: you can create as-on-premises assessments as soon as the servers are available in the Azure Migrate portal.

FTT sizing parameters for AVS assessments

AVS uses the vSAN storage subsystem. vSAN storage policies define storage requirements for virtual machines. They guarantee the required level of service for virtual machines, as they determine how storage resources are allocated for a virtual machine. The following FTT-RAID combinations are available:

| Failures to tolerate (FTT) | RAID configuration | Minimum required number of nodes | Sizing consideration |
|---|---|---|---|
| 1 | RAID-1 (mirroring) | 3 | A 100 GB virtual machine will consume 200 GB. |
| 1 | RAID-5 (erasure coding) | 4 | A 100 GB virtual machine will consume 133.33 GB. |
| 2 | RAID-1 (mirroring) | 5 | A 100 GB virtual machine will consume 300 GB. |
| 2 | RAID-6 (erasure coding) | 6 | A 100 GB virtual machine will consume 150 GB. |
| 3 | RAID-1 (mirroring) | 7 | A 100 GB virtual machine will consume 400 GB. |
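The consumption figures in the table follow from a fixed capacity multiplier per FTT-RAID combination. A minimal sketch of that calculation is shown below; the multipliers are derived from the table rows, while the `CAPACITY_MULTIPLIER` and `consumed_gb` names are hypothetical.

```python
# Raw-capacity multipliers derived from the table rows
# (for example, FTT=1 with RAID-1 stores two full copies of the data).
CAPACITY_MULTIPLIER = {
    (1, "RAID-1"): 2.0,
    (1, "RAID-5"): 4 / 3,
    (2, "RAID-1"): 3.0,
    (2, "RAID-6"): 1.5,
    (3, "RAID-1"): 4.0,
}


def consumed_gb(vm_gb: float, ftt: int, raid: str) -> float:
    """Storage consumed on vSAN by a VM of the given size."""
    key = (ftt, raid)
    if key not in CAPACITY_MULTIPLIER:
        raise ValueError(f"Unsupported FTT/RAID combination: {key}")
    return vm_gb * CAPACITY_MULTIPLIER[key]


for (ftt, raid) in CAPACITY_MULTIPLIER:
    print(f"FTT={ftt}, {raid}: {consumed_gb(100, ftt, raid):.2f} GB")
```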

Best practices for confidence ratings

When you run performance-based assessments, the assessment receives a confidence rating from 1 star (lowest) to 5 stars (highest). To use confidence ratings effectively:

- Azure VM and AVS assessments require:

  - CPU and memory utilization data for each server;

  - read/write throughput data for each disk attached to the on-premises server;

  - inbound and outbound network traffic data for each network adapter attached to the server.

- Azure SQL assessments require performance data for the SQL instances and databases being assessed, including:

  - CPU and memory utilization data;

  - read/write throughput data for data and log files;

  - I/O latency.

Depending on the percentage of data points available in the selected time period, the confidence score for the assessment is set according to the following table.

| Data point availability | Confidence rating |
|---|---|
| 0-20% | 1 star |
| 21-40% | 2 stars |
| 41-60% | 3 stars |
| 61-80% | 4 stars |
| 81-100% | 5 stars |
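The mapping in the table can be sketched as a small function. The `confidence_stars` name is hypothetical, and the handling of fractional values that fall between the listed bands (for example, 20.5%) is an assumption: they are rounded up into the next band.

```python
import math


def confidence_stars(available_pct: float) -> int:
    """Map the percentage of available data points to a 1-5 star rating."""
    if not 0 <= available_pct <= 100:
        raise ValueError("available_pct must be between 0 and 100")
    # Each band is 20 percentage points wide; 0% still gets 1 star.
    return max(1, math.ceil(available_pct / 20))


for pct in (0, 20, 21, 60, 61, 100):
    print(f"{pct}% -> {confidence_stars(pct)} star(s)")
```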

Common assessment issues

Here's how to address some common environment issues that affect assessments.

Out-of-sync assessments

If you add or remove servers from a group after creating an assessment, the assessment is marked out of sync. Run the assessment again (Recalculate) to reflect the changes in the group.

Outdated assessments

Azure VM and AVS assessments

If there are changes to on-premises servers that are in an assessed group, the assessment is marked outdated. An assessment can be marked outdated because of one or more changes to the following properties:

- Number of processor cores

- Allocated memory

- Boot type or firmware

- Operating system name, version, and architecture

- Number of disks

- Number of network adapters

- Disk size (GB allocated)

- Network adapter properties (for example, a changed MAC address or an added IP address)

Run the assessment again (Recalculate) to reflect the changes.
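To illustrate the idea, the check amounts to comparing the server properties captured at assessment time with the current ones. The sketch below is not how Azure Migrate implements it; the property keys, the `TRACKED` tuple, and the `changed_properties` function are hypothetical names chosen for this example.

```python
# Hypothetical property keys mirroring the list above.
TRACKED = (
    "cores", "memory_mb", "boot_type", "os",
    "disk_count", "nic_count", "disk_size_gb", "nic_properties",
)


def changed_properties(at_assessment: dict, current: dict) -> list:
    """Return the tracked properties whose values differ between snapshots."""
    return [key for key in TRACKED
            if at_assessment.get(key) != current.get(key)]


before = {"cores": 4, "memory_mb": 8192, "disk_count": 2}
after = {"cores": 8, "memory_mb": 8192, "disk_count": 2}

changes = changed_properties(before, after)
if changes:
    print(f"Assessment is outdated; changed: {changes}. Recalculate it.")
```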

Azure SQL assessments

If there are changes to on-premises SQL instances and databases that are in an assessed group, the assessment is marked outdated. An assessment can be marked outdated for one or more of the following reasons:

- A SQL instance was added to or removed from a server.

- A SQL database was added to or removed from a SQL instance.

- The total database size in a SQL instance changed by more than 20%.

- The number of processor cores changed.

- The allocated memory changed.

Run the assessment again (Recalculate) to reflect the changes.
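As a rough illustration of the 20% rule, the sketch below compares the total database size at assessment time with the current size. Measuring the change relative to the size at assessment time is an assumption, and `sql_size_changed` is a hypothetical name, not part of any Azure tool.

```python
def sql_size_changed(size_at_assessment_gb: float, current_size_gb: float,
                     threshold: float = 0.20) -> bool:
    """True if total database size changed by more than the threshold.

    The change is measured relative to the size at assessment time
    (an assumption made for this sketch).
    """
    if size_at_assessment_gb <= 0:
        raise ValueError("size_at_assessment_gb must be positive")
    change = abs(current_size_gb - size_at_assessment_gb) / size_at_assessment_gb
    return change > threshold


print(sql_size_changed(100, 121))  # growth of 21%
print(sql_size_changed(100, 110))  # growth of 10%
```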

Azure App Service assessments

If there are changes to on-premises web applications that are in an assessed group, the assessment is marked outdated. An assessment can be marked outdated for one or more of the following reasons:

- Web applications were added to or removed from a server.

- Configuration changes were made to existing web applications.

Run the assessment again (Recalculate) to reflect the changes.

Low confidence ratings

Some data points might be unavailable for an assessment for the following reasons:

- The environment was not profiled for the duration for which the assessment is being created. For example, if you create an assessment with a performance duration of one week, you must wait at least a week after you start discovery for all the data points to be collected. If you can't wait that long, change the performance duration to a shorter period and recalculate the assessment.

- The assessment could not collect performance data for some or all servers during the assessment period. For a high confidence rating, make sure that:

  - Servers are powered on for the duration of the assessment.

  - Outbound connections on port 443 are allowed.

  - For Hyper-V servers, dynamic memory is enabled.

  - Agents in Azure Migrate have a connection status of Connected. Also check the last heartbeat.

  - For Azure SQL assessments, the Azure Migrate connection status for all SQL instances is "Connected" in the discovered SQL instance blade.

  Recalculate the assessment to reflect the latest changes in the confidence rating.

- For Azure VM and AVS assessments, some servers were created after discovery started. For example, you create an assessment for the performance history of the last month, but several servers were created in the environment only a week ago. In this case, performance data for the new servers will not be available for the entire duration, and the confidence rating will be low.

- For Azure SQL assessments, some SQL instances or databases were created after discovery started. For example, you create an assessment for the performance history of the last month, but several SQL instances or databases were created in the environment only a week ago.