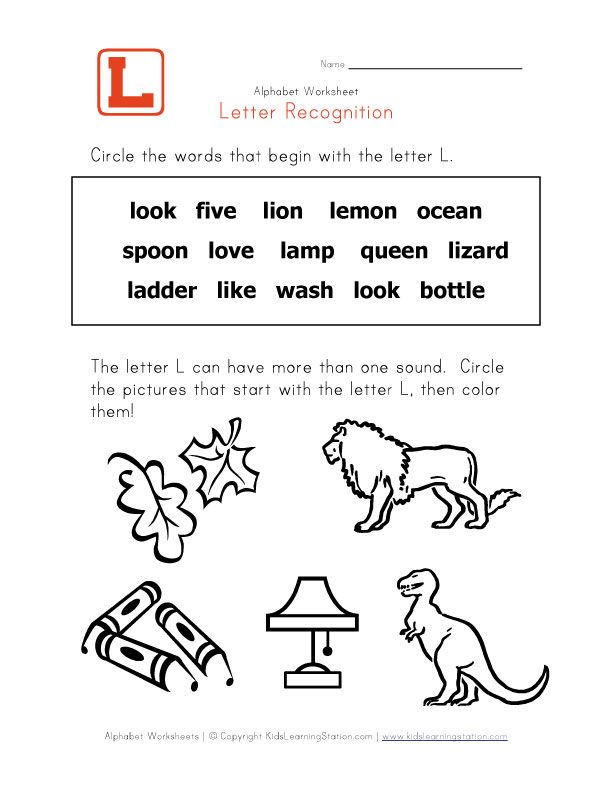

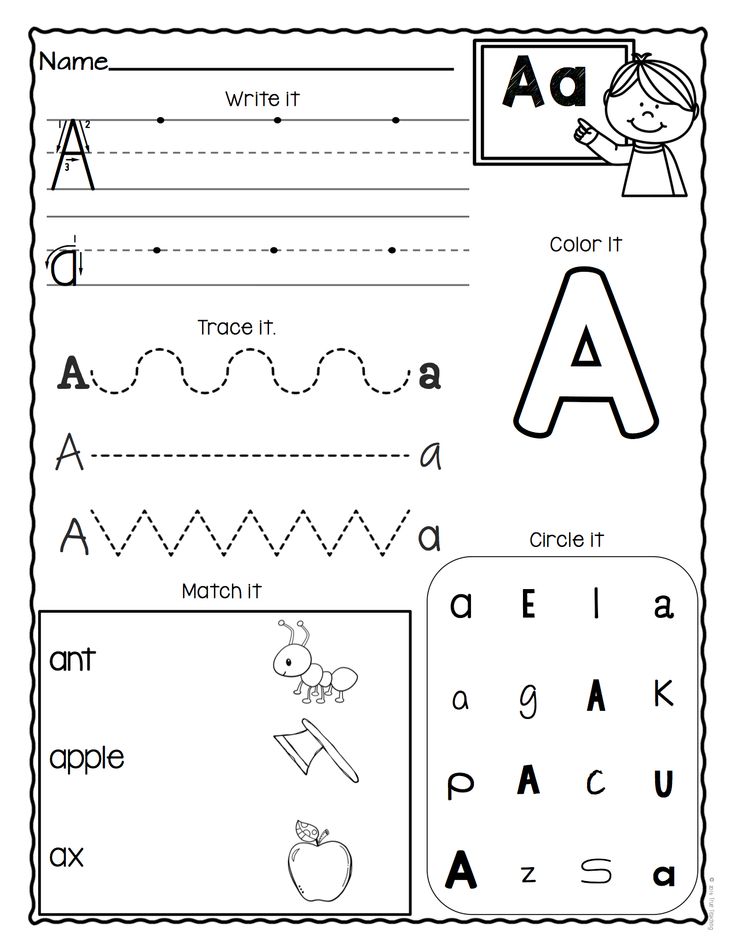

Kindergarten letter recognition

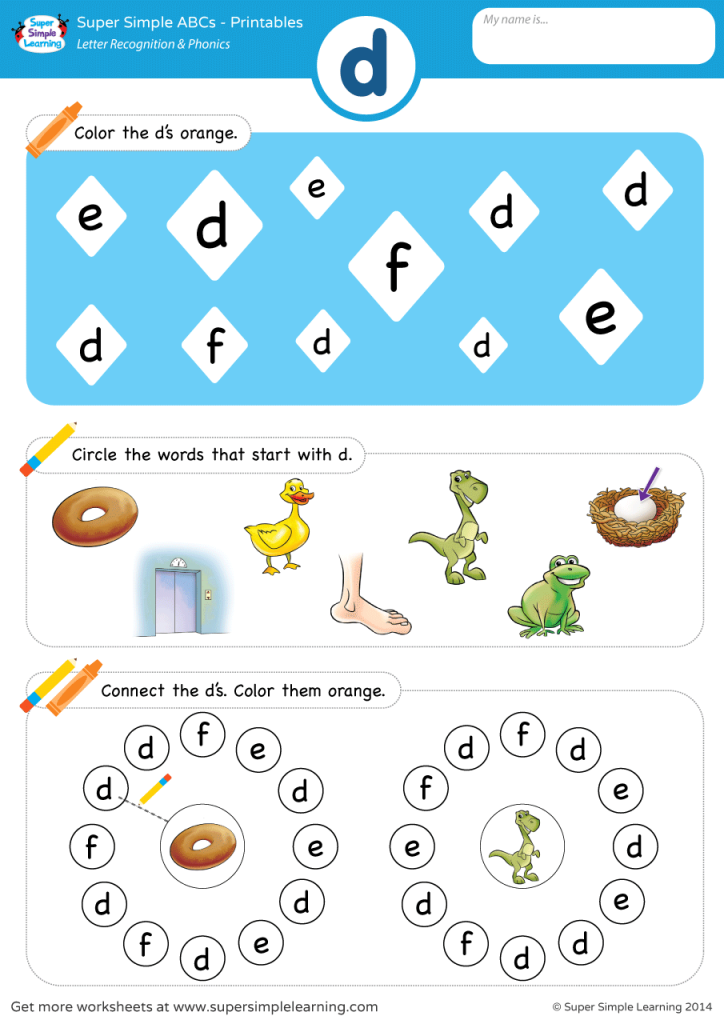

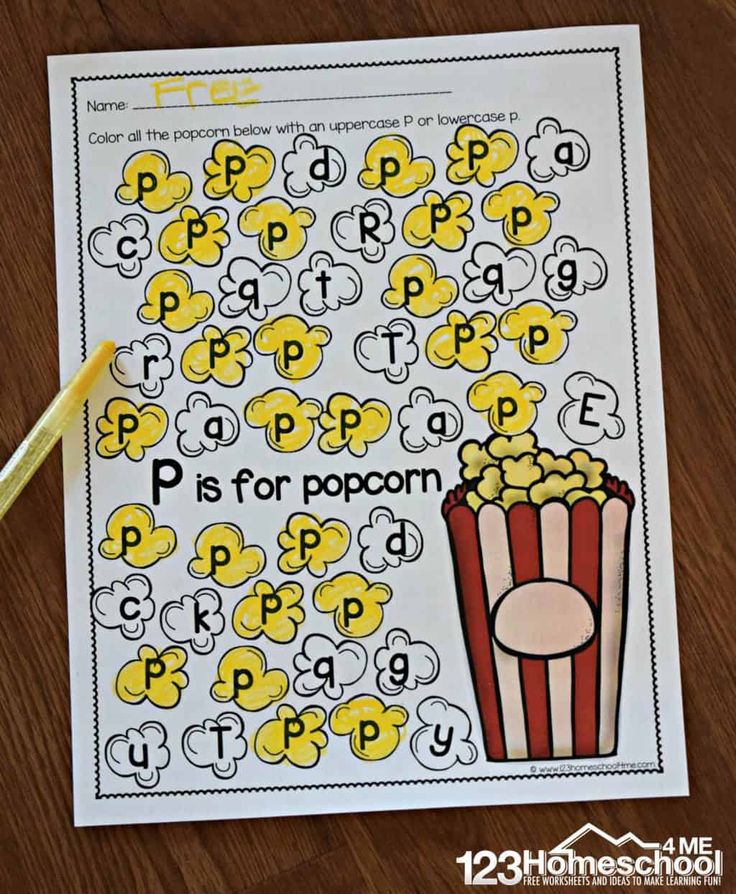

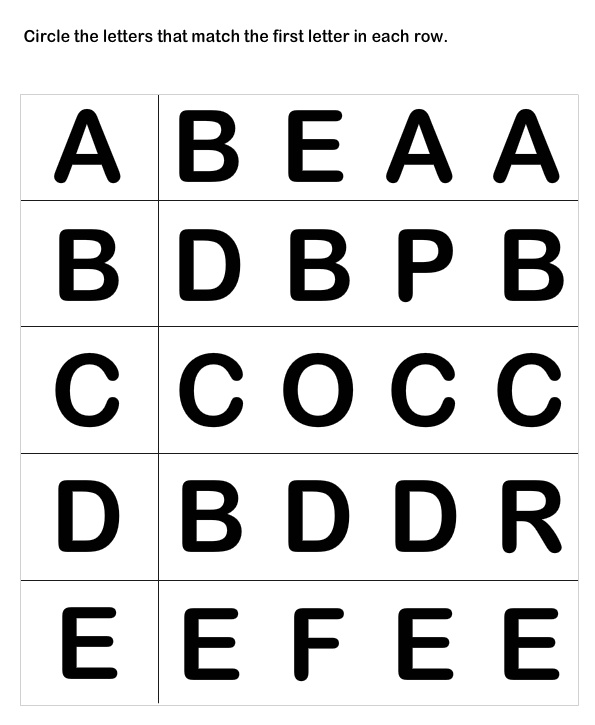

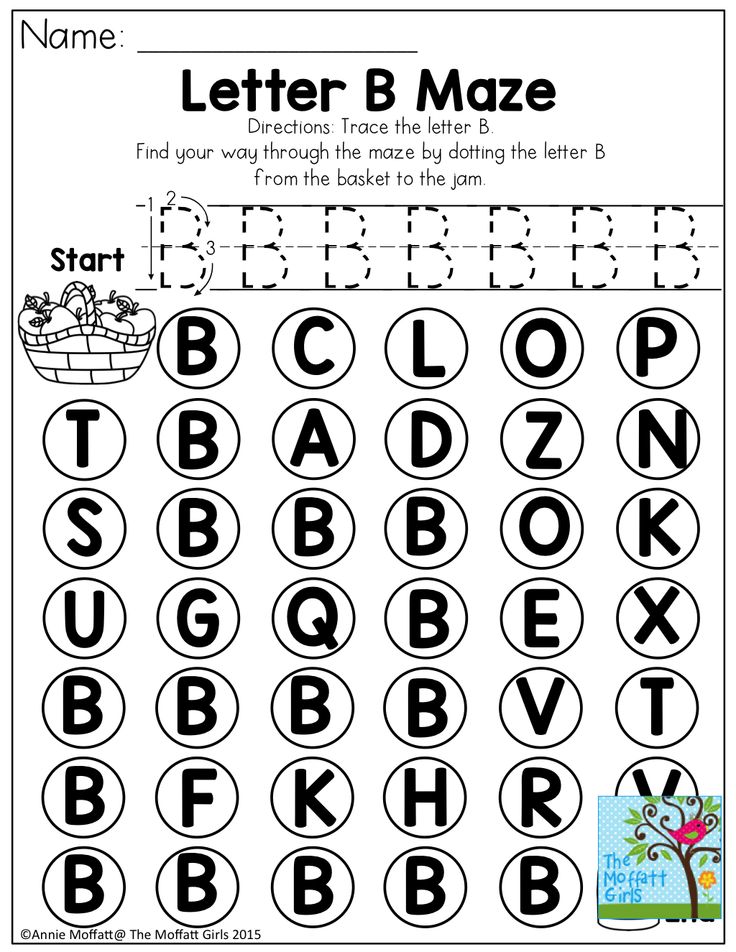

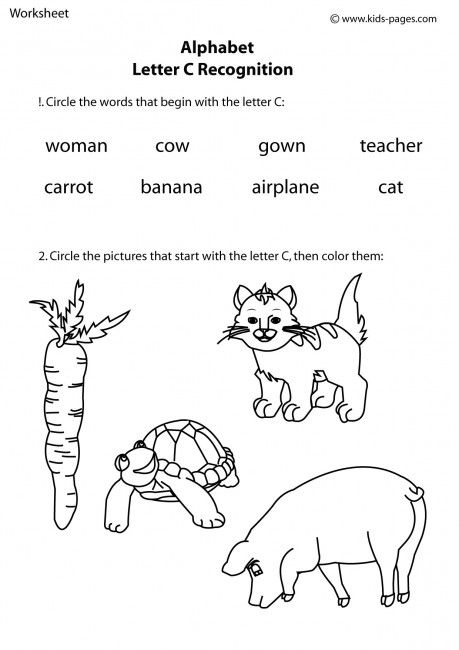

10 Simple Letter Recognition Activities for Kindergarten

One thing I distinctly remember from Kindergarten is playing with the alphabet magnets on a magnetic easel. Back then, most of what I learned throughout the year was letters and their sounds, through lots of letter recognition activities. These days, most Kindergarteners come in knowing all their letters and most of their sounds. That doesn't mean we can stop worrying about letter recognition! It's important to keep teaching the letters and their sounds to build fluency and prepare students for the first steps of learning to read.

Teachers are usually split on how to teach letter recognition and sounds in Kindergarten. Some prefer to focus on one letter per week, teaching its sound along with other phonics skills, and many curriculums are laid out this way. Other teachers prefer the “boot camp” style: teaching one letter per day for the first 26 days of school.

How you teach letters really depends on your school and class. I prefer to do a week or two of just letter recognition, then three to four weeks focusing on letter sounds, one to two sounds per day. There is no “right” or “wrong” way to teach letters and sounds, as long as you spend time reviewing and practicing them. Today, I'm sharing 10 simple letter recognition activities for Kindergarten with you!

1. Pocket Chart Games

If you have a pocket chart and some alphabet flashcards, you're in luck! There are plenty of ways to practice naming letters with a pocket chart. You could play memory, where students have to match each uppercase letter to its lowercase letter. You can hide an object behind a flashcard and have students try to find it by saying the letter name of each card. Another idea is to use letter cards to spell the names of classmates.

2. Play Dough Letters

Fine motor skills are SO important to keep developing in Kindergarten. Play dough is undoubtedly my favorite way to add in a little fine motor practice, plus it's fun! Have students roll the play dough into snakes to make different letters. You can display the letter on the board, or use a play dough mat like this one found here: Phonics Play Dough Mats.

3. “I'm thinking of a letter…”

This guessing game is a fun way to practice the various attributes of a letter! Give your students clues about the letter you are thinking of, and let them guess which one it is. For example: “I'm thinking of a letter that is a vowel. It looks the same in uppercase and lowercase. It does not have straight lines.” You may have guessed I was thinking of the letter O!

4. Alphabet Sort

Using stamps, letter magnets, or even a pencil, have students sort letters by their different attributes, by vowels and consonants, or by whether the letter is in their name. You can grab this freebie here: Free Alphabet Sorting Mats.

5. Play a song

Did you know that songs help kids learn? Hearing that rhythm and rhyme helps skills stick in their brains longer. Think about how you get those songs stuck in your head, even from 10 years ago! You can find a whole playlist of songs to practice letters here.

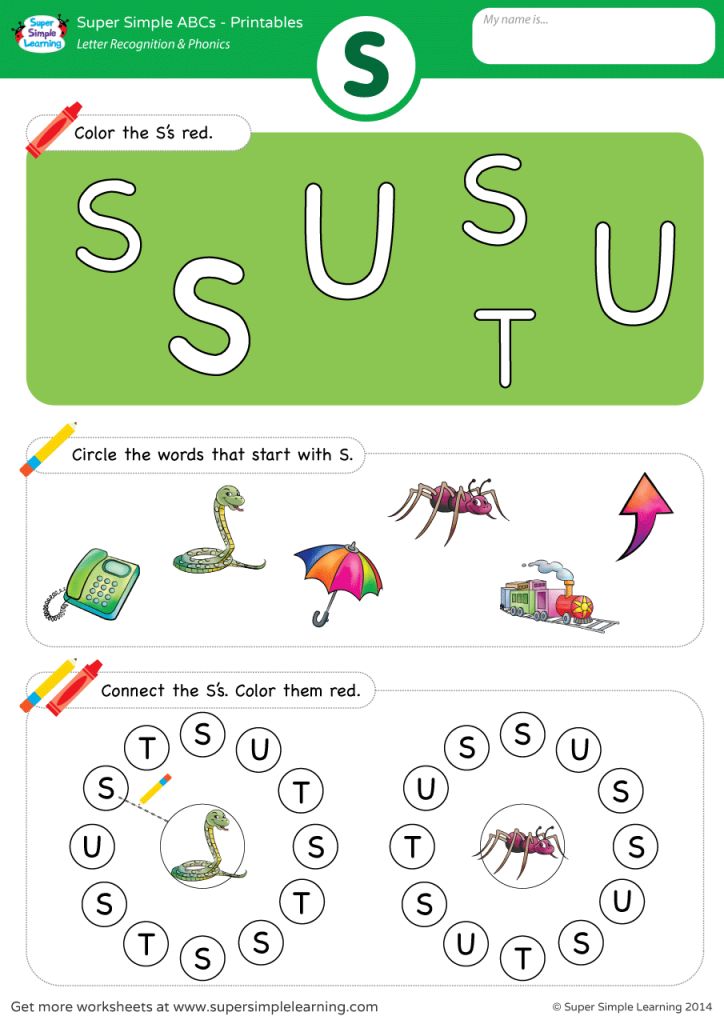

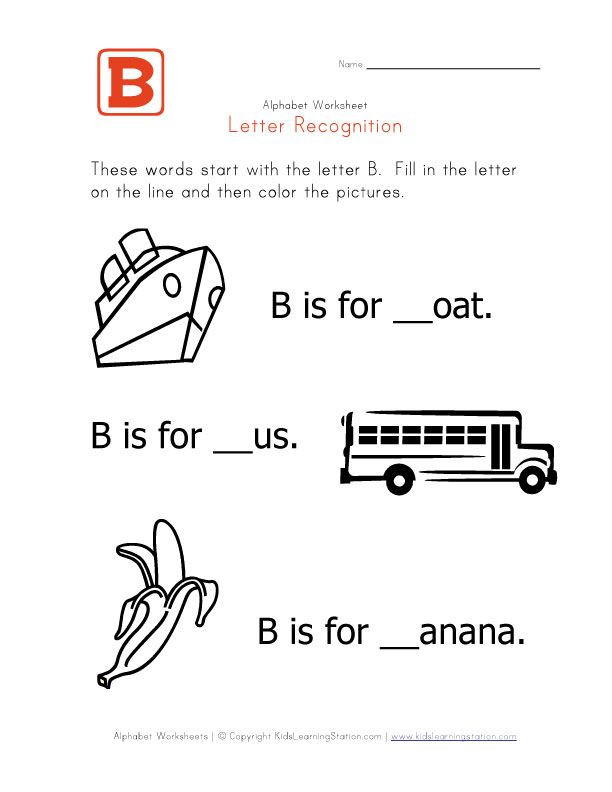

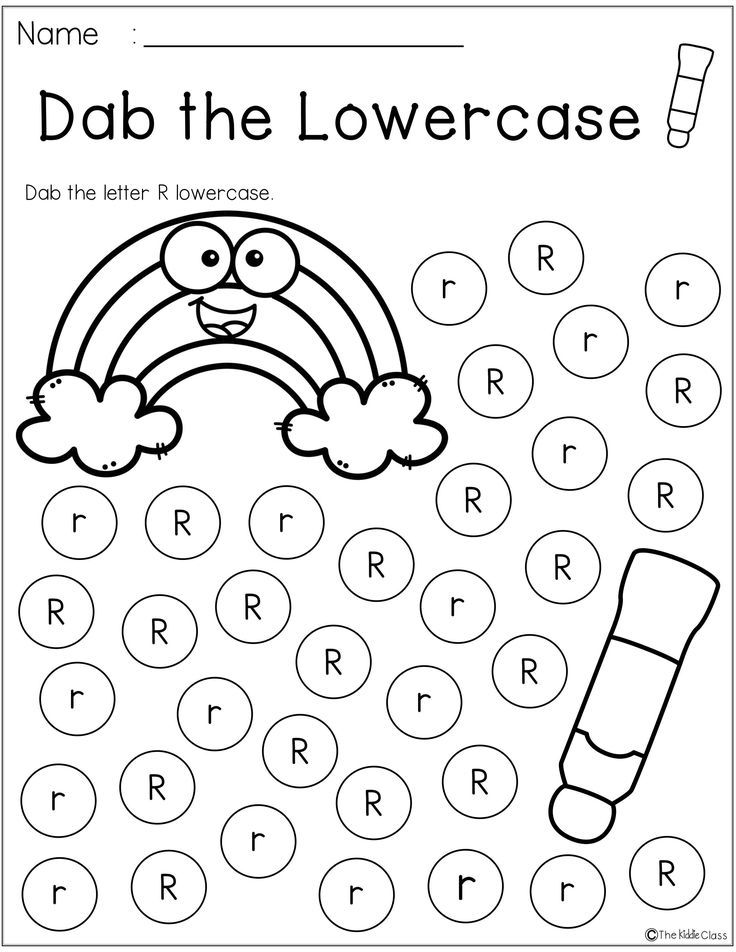

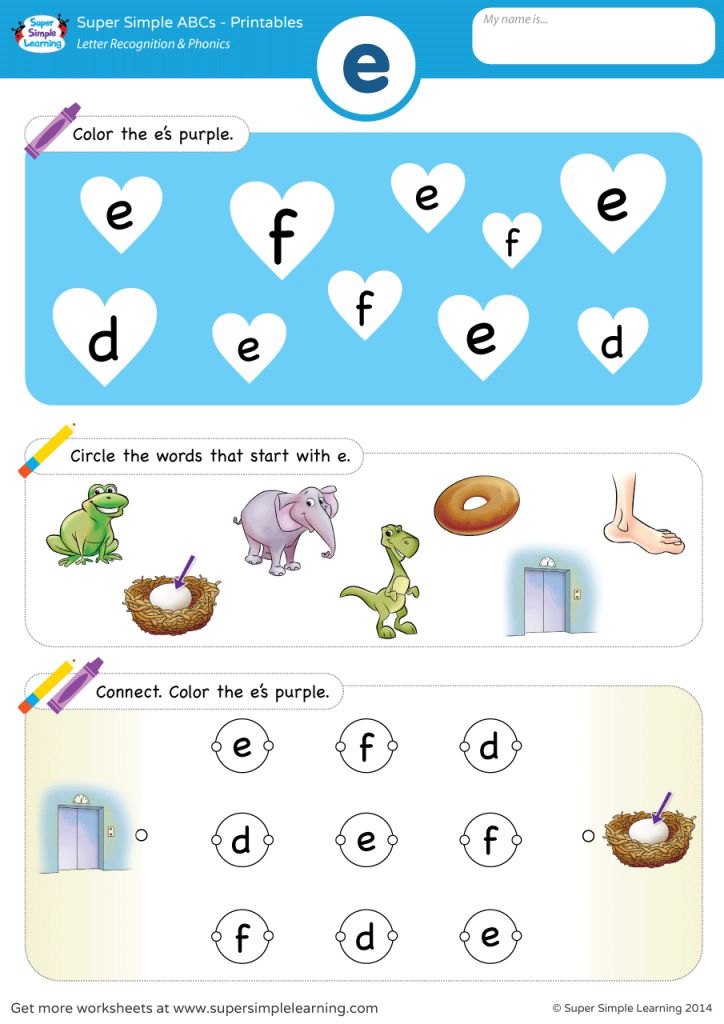

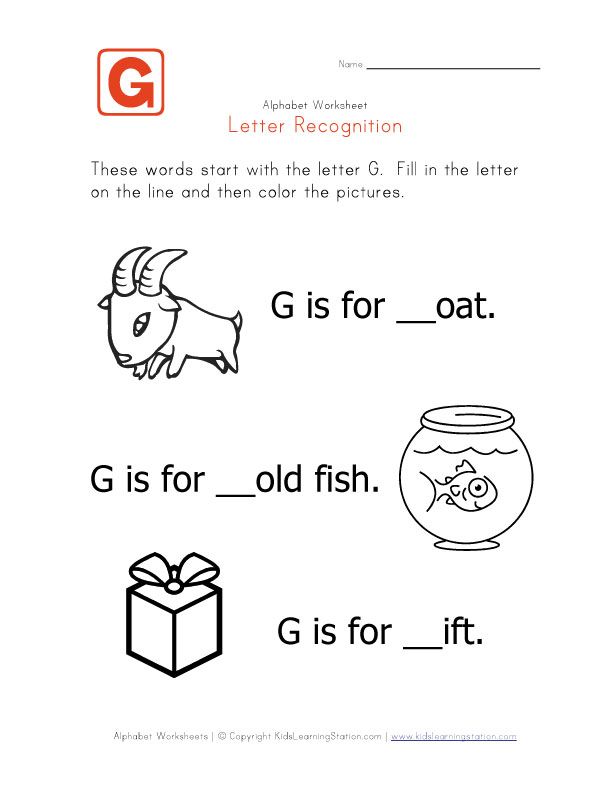

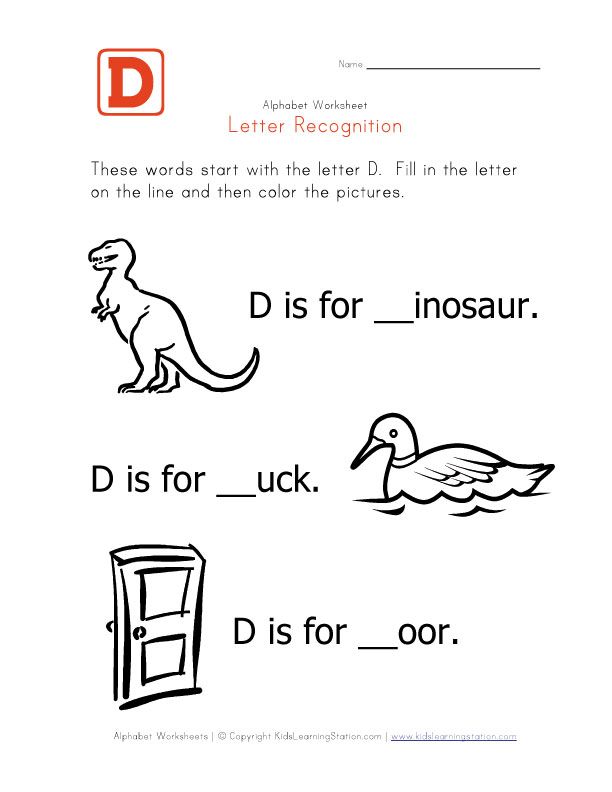

6. Letter Crafts

This makes an adorable book that students can take home once they are finished! We did these letter crafts as part of our Fun Friday centers every week. At the beginning of the year, I did a lot of modeling and we did the first few together. After a few letters, they were able to do it just by looking at the picture sample.

7. Read a book

Reading is one of the best ways to add in some variety to your phonics lessons! Grab an alphabet book and have students go on a letter book hunt while you read. Pause when you come to a letter and have the students shout it out.

Check out my post on books that teach letter recognition and sounds here.

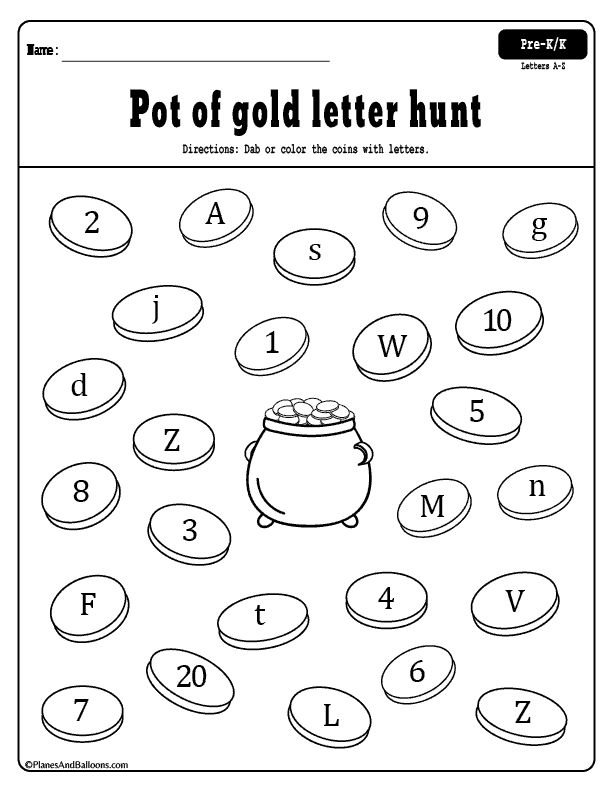

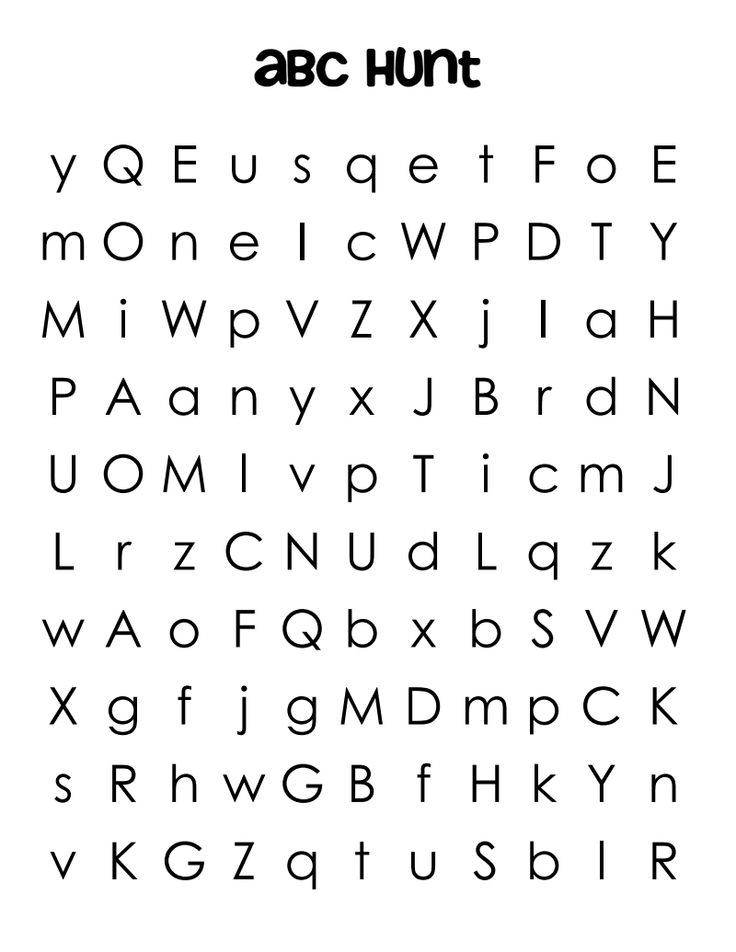

8. Letter Hunt

Use what's already in your classroom to go on a letter hunt! Have students pick a letter card out of a basket and go “hunt” for that letter. They can find it on a poster, a book cover, a friend's name, anywhere! Once they find one, they stay where they are until everyone has found a letter.

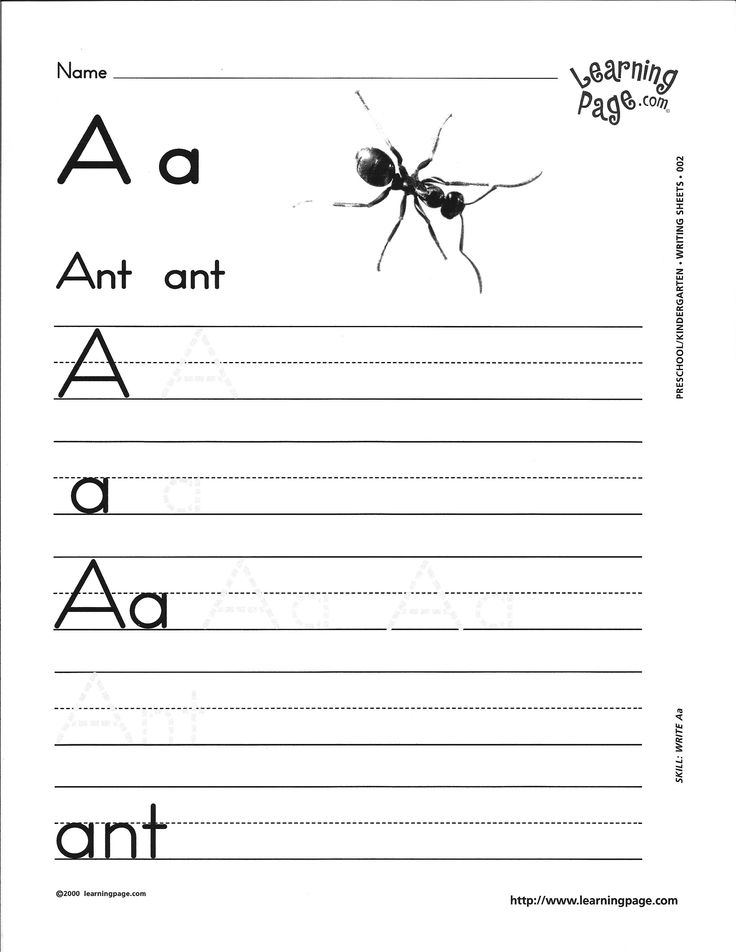

9. Write and Wipe

Give each of your students a whiteboard and dry erase marker. Call out a letter and have students write it on their board and hold it up to show you. This lets you quickly check how well your students know each letter.

10. Shaving cream letters

Squirt a little bit of shaving cream on each student's desk and have them practice writing letters! You can call the letter out to write or write it on the board and have them copy it. Bonus: this makes your classroom smell amazing and cleans the desks!

More Letter Recognition Activities for Kindergarten

If you're looking for a simple, all-in-one guide to teaching Letter Recognition and Sounds, check out the Kindergarten Phonics Curriculum Unit 1: Letter Recognition and Sounds. This includes a six-week unit overview, phonemic awareness warm-ups, detailed lesson plans, center activities like the play dough mat above, worksheets, and more!

What letter recognition activities for Kindergarten would you add to this list?

11 Easy Letter Recognition Centers for Kindergarten

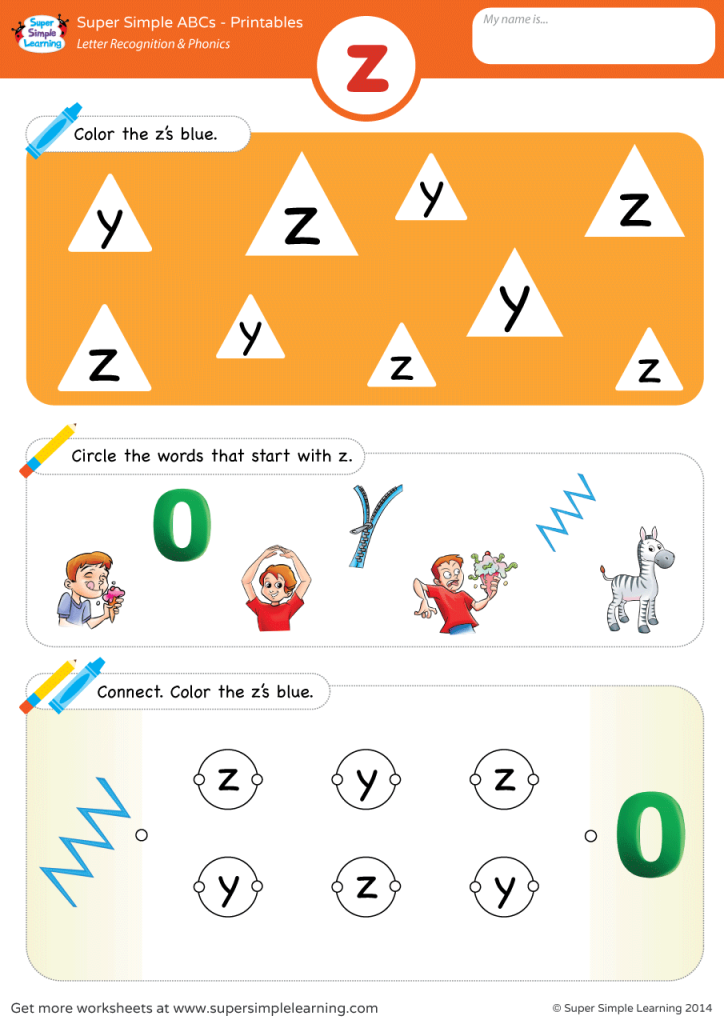

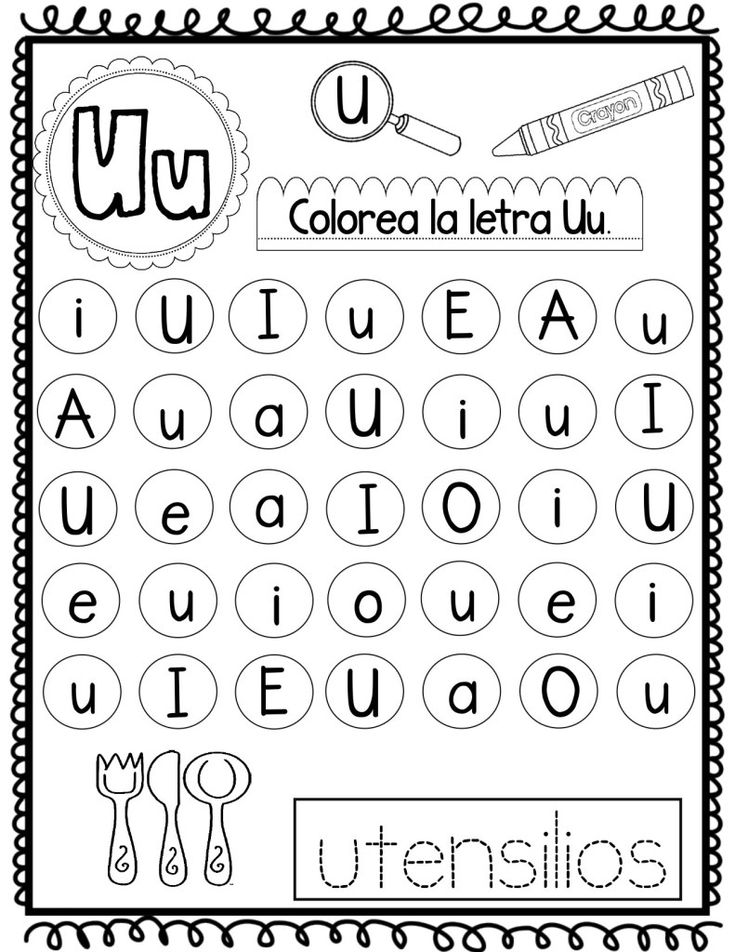

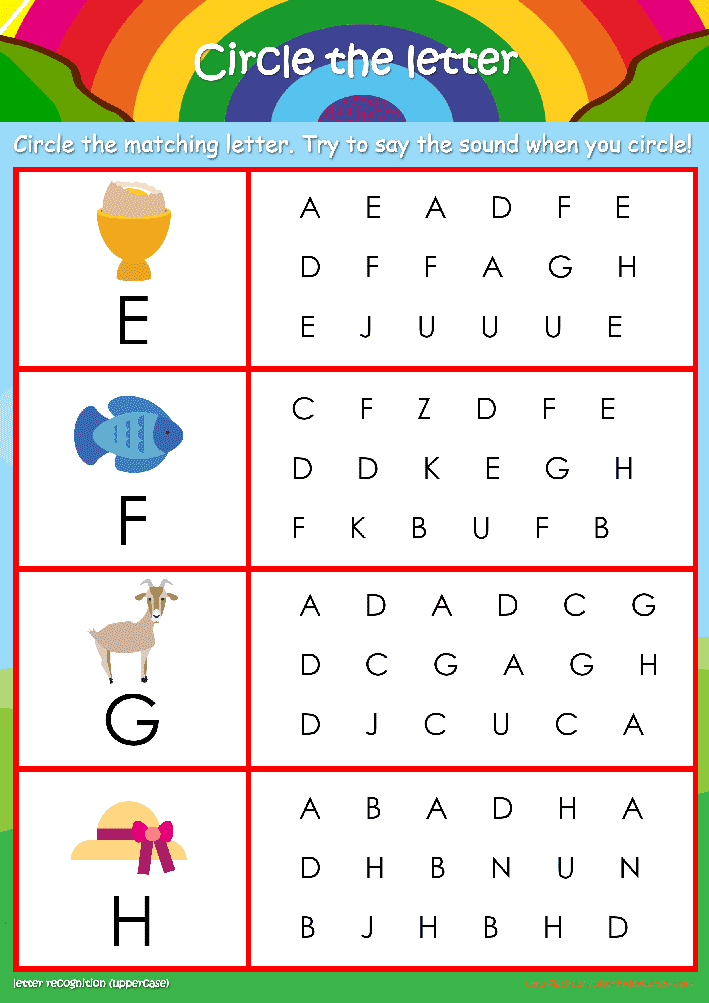

As a kindergarten teacher, you eat, breathe, and sleep letters. Learning to recognize letters is one of the most pivotal skills students learn in kindergarten. To help you freshen up your literacy centers, read on for 11 easy letter recognition activities for kindergarten.

This article, along with many other articles on The Printable Princess website, contains Amazon affiliate links. If you purchase through the links I earn a small commission. We only share links to things that we love.

Letter Recognition Activities for Kindergarten

Letter recognition is the most basic literacy skill, the one on which all other literacy and reading skills are built. Put simply, it’s super important for students!

It’s important to work letter recognition activities into your daily routine. However, doing the same activities and letter recognition centers over and over will lead to boredom for your students faster than you can say “school’s out!”

Beat the boredom and master those letters with these 11 letter recognition activities for kindergarten.

#1. Alphabet Tic-Tac-Toe

For this center activity, pair students up into partners. Give each pair a basic tic-tac-toe board or draw one on a whiteboard.

You can prep mini tic-tac-toe boards by folding a piece of card stock into fourths and creating a board on each fourth. Laminate and cut them apart to use time and again.

Each partner will be assigned a letter to mark their spaces. Each time they lay their magnetic letter or write it with dry-erase marker, they must say the letter name. Play continues until the first student gets 3 in a row to win.

You can also have students use the same letter, with one student being uppercase and the other student being lowercase.

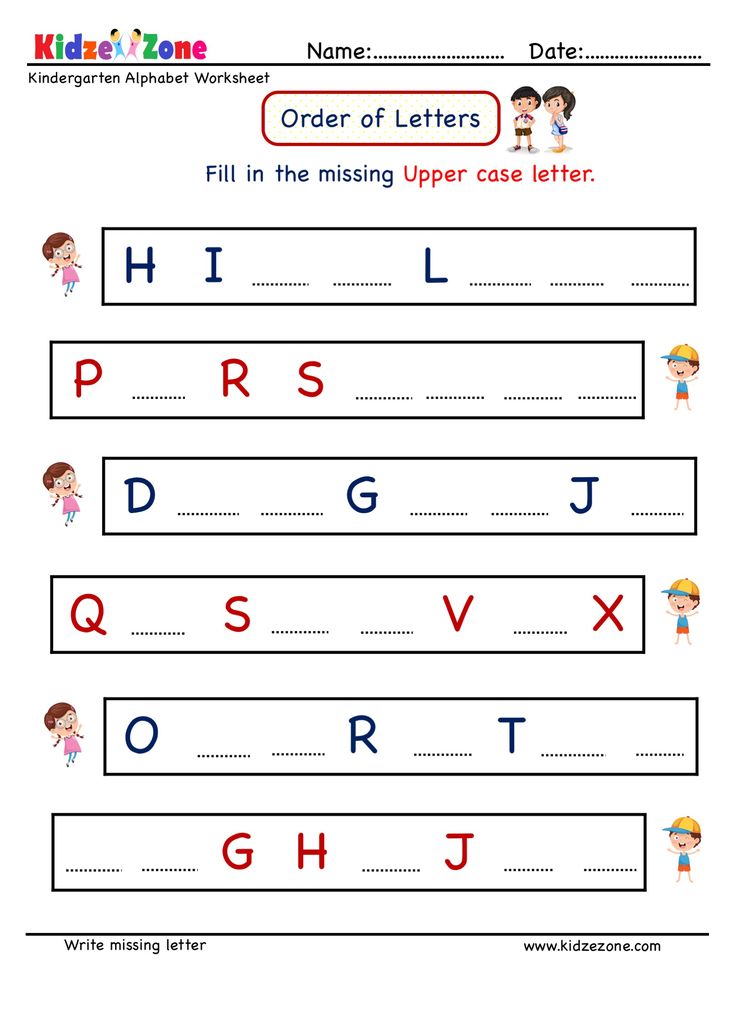

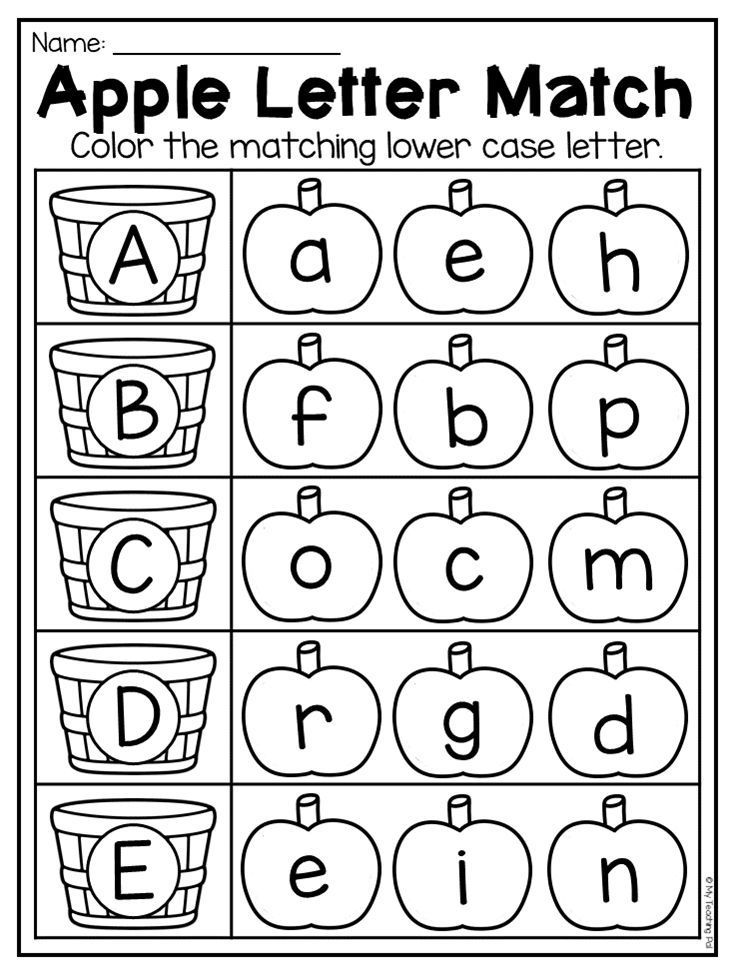

#2. Letter Match

This activity works as both a letter recognition center and a team-building center. To play, mix up uppercase and lowercase letter tiles or letter cards and place them in a pile.

Have students work together in a small group to match the uppercase and lowercase letters together. To take it a step further, challenge students to place the uppercase and lowercase letter matches in order from A-Z.

#3. Hidden Letters

Hide magnetic letters in a tub of sensory materials such as cereal, rice, noodles, etc. Give students a pair of jumbo tweezers and have them take turns grabbing a hidden letter with the tweezers, identifying the letter, and saying the letter name aloud.

This letter recognition center is perfect for incorporating fine motor skills and sensory learning into the day.

#4. Heads Up

For this center, students will play with a partner. Each pair will need a whiteboard and dry-erase marker. The first student will write a letter on the whiteboard without showing their partner.

They’ll pass the whiteboard to their partner, without the partner looking at the letter. The partner will hold the whiteboard up to their forehead, facing their partner.

The student that wrote the letter will give hints about the letter to their partner until the partner guesses the letter. Hints can be about the shape of the letter, whether it’s a vowel or consonant, if someone’s name begins with it, or a beginning sound word clue.

After the student has made 5 guesses or guessed correctly, the letter will be revealed and then the next student will choose the letter and give hints to play another round.

#5. Letter Toss

For this letter recognition activity for kindergarten, use a dry-erase cube to write letters on all sides. Students take turns rolling the cube, saying the letter, and writing it on a whiteboard in both uppercase and lowercase. Play then passes to the next student.

To focus on only one letter at a time, you can write the same letter in uppercase and lowercase on the cube and have students identify the letter and whether it is uppercase or lowercase before they write it.

You can also use color-coded letter cards and corresponding recording sheets like the ones found in this roll and color activity.

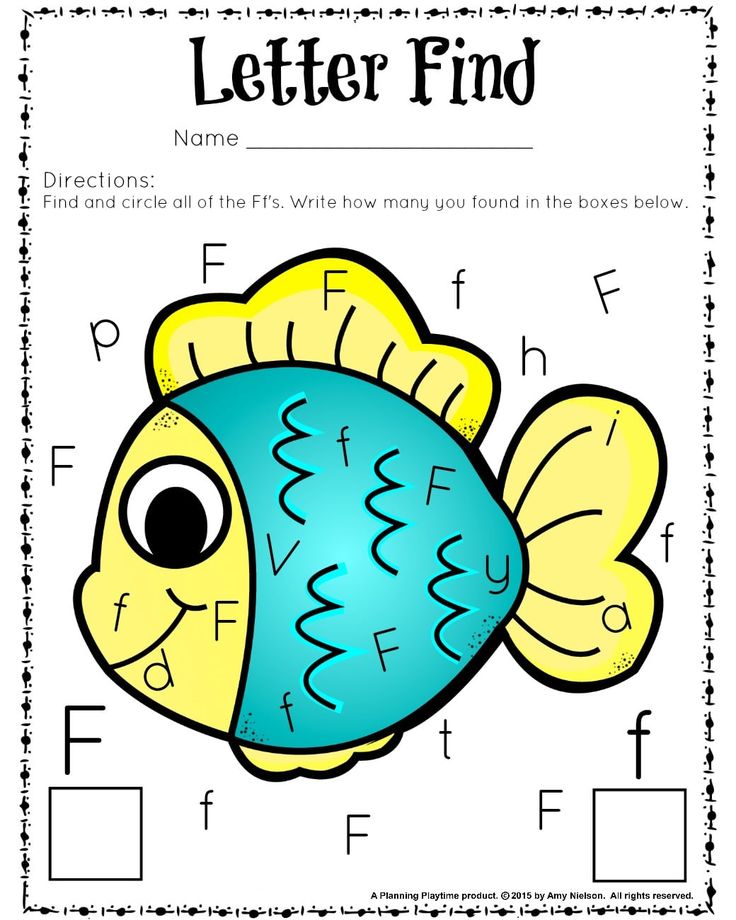

#6. Find the Letter

This easy letter recognition center will be a blast for your students and help them think on their feet. To play, spread magnetic letters or letter cards out on a table or on the floor.

Call out a letter and have students race to find it in the pile. The first student to find it gets to keep the letter. The student with the most letters at the end wins. You can also have students take turns finding the letters instead of racing to find them.

This would make a great small group game. Follow it up with an independent practice activity where students work on finding letters and reading left to right with individual find the letter activity pages.

#7. Magazine Hunt

Choose a few letters that you want your students to work on. Divide a large piece of chart paper into that number of sections and label the top of each section with a letter, written in both uppercase and lowercase.

Give one piece of chart paper to each small group of 4-5 students. Supply students with magazines, scissors, and glue. To practice, students will look through the magazines, cut out letters that match one of the sections on the chart paper, and glue them in the appropriate section.

To save yourself prep time, you can make one chart paper and have all students rotate to this letter recognition center to work together to fill it up. Next time you use the center, you can use a new chart paper with new letters.

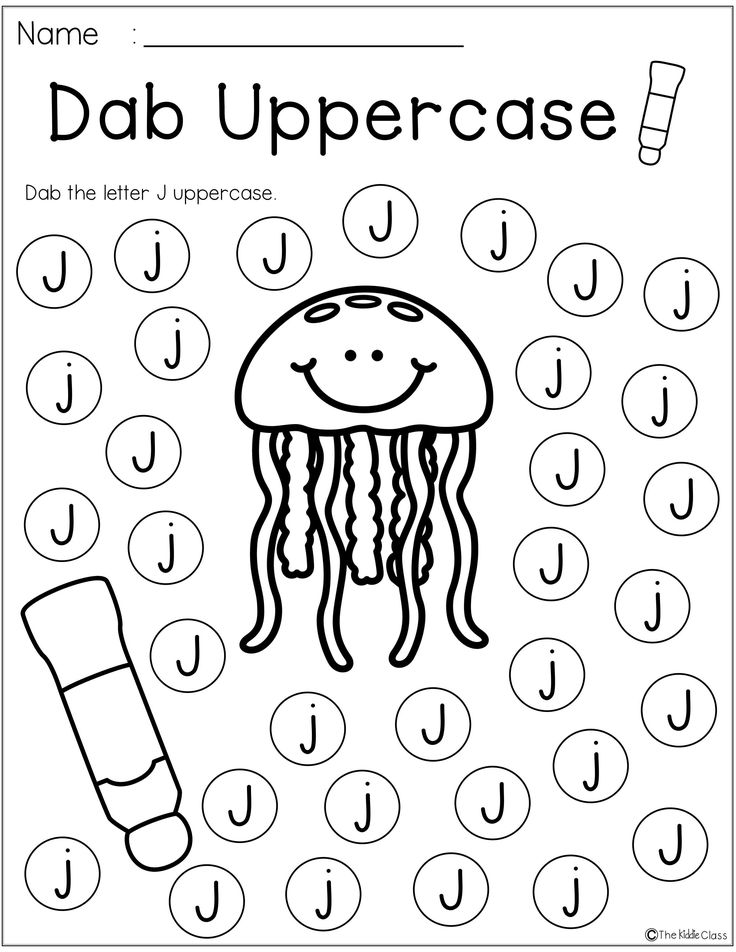

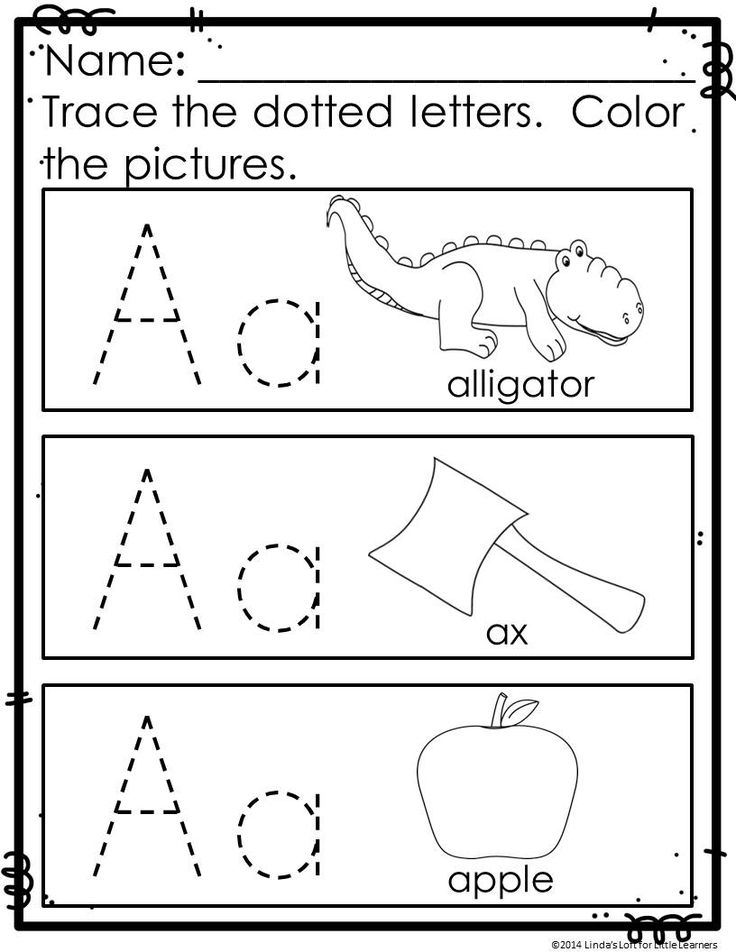

#8. Sticker Letters

Write an uppercase and lowercase letter pair in large letters on a piece of white paper. Make copies for each student. Students will practice forming the letters by using small stickers to place on top of the letters.

This is great for fine motor practice as they practice peeling off each sticker and sticking it to the letter. If they finish early they can rainbow write the letter on the back of the page or draw pictures that start with that letter, working on beginning letter sounds.

#9. Clip and Match

To prepare this activity, write different sets of uppercase and lowercase letters on sentence strips. Write the letter 3 times on each strip. For example, one strip may have B, B, B and another may have b, b, b.

Mix up the sentence strips and provide students with clothespins. Students will work to find an uppercase and lowercase sentence strip that match and clothespin them together.

For extra fine motor practice, they can clip over top of each letter, using 3 clothespins per match and saying the letter each time they clip.

#10. Spoon Match Up

This easy, DIY letter recognition center requires nothing more than a set of white plastic spoons, a set of clear plastic spoons, and a permanent marker.

To prep, write one letter on the top of a white plastic spoon and the corresponding uppercase/lowercase letter on the bottom of a clear plastic spoon with a permanent marker.

Continue until all letters have been written on both sets of spoons. You can prep letters A-Z and use them all or sort them into smaller groups of letters to focus on fewer letters at a time.

Mix up the spoons and have students find the matching white and clear spoons and put them together so they can see both letters and make a match.

#11. Click and Listen Boom Cards

This letter recognition center for kindergarten would be perfect for an independent center or technology center. These Click and Listen Letter Identification Boom Cards provide students with an interactive way to practice hearing the letter sound and choosing the corresponding letter.

There are 2 decks for letter identification and 2 decks for letter sound identification included, giving students ample opportunities to practice. These are self-checking, have audio directions and letter names or sounds depending on the deck, and require no prep.

I hope these 11 letter recognition center ideas have given you some inspiration to try something new with your students to practice learning letters.

If you would love even more letter recognition activities for kindergarten, check out my Endless Letters and Letter Sounds Bundle. This bundle is always growing and getting new activities added to it, so you’ll never run out of fun ways to practice!

Doing text recognition in half an hour / Sudo Null IT News

Hello Habr.

After experimenting with the well-known MNIST database of 60,000 handwritten digits, a logical question arose: is there something similar that supports not only digits but also letters? As it turned out, there is, and it is called, as you might guess, Extended MNIST (EMNIST).

If you are interested in how this dataset can be used to build simple text recognition, read on.

Note: This is an experimental and educational example; I was just curious to see what would happen. I did not plan, and do not plan, to build a second FineReader, so of course many things are not implemented here. Comments along the lines of “why bother” or “something better already exists” are therefore beside the point. Ready-made OCR libraries for Python probably already exist, but it was interesting to do it myself. By the way, for those who want to see how the real FineReader was made, there are two 2014 articles on the company's blog on Habr (though, as with any corporate blog, without source code or details). Well, let's get started; everything here is open source.

Let's take plain text as an example. Like this:

HELLO WORLD

And let's see what we can do with it.

Breaking text into letters

The first step is to break the text into individual letters. OpenCV's findContours function is useful for this.

Open the image (cv2.imread), convert it to black and white (cv2.cvtColor + cv2.threshold), slightly thicken the letters (cv2.erode) and find the contours.

image_file = "text.png" img = cv2.imread(image_file) gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY) img_erode = cv2.erode(thresh, np.ones((3, 3), np.uint8), iterations=1) # Get contours contours, hierarchy = cv2.findContours(img_erode, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE) output = img.copy() for idx, contour in enumerate(contours): (x, y, w, h) = cv2.boundingRect(contour) # print("R", idx, x, y, w, h, cv2.contourArea(contour), hierarchy[0][idx]) # hierarchy[i][0]: the index of the next contour of the same level # hierarchy[i][1]: the index of the previous contour of the same level # hierarchy[i][2]: the index of the first child # hierarchy[i][3]: the index of the parent if hierarchy[0][idx][3] == 0: cv2.rectangle(output, (x, y), (x + w, y + h), (70, 0, 0), 1) cv2.imshow("Input", img) cv2.imshow("Enlarged", img_erode) cv2.imshow("output", output) cv2.waitKey(0)

We get a hierarchical contour tree (the cv2.RETR_TREE parameter). The first contour is the outline of the whole picture, then come the outlines of the letters, then their internal outlines (such as the hole in "O"). We only want the letter outlines, so I check that each contour's "parent" is the overall outline. This is a simplistic approach that may not work for real scans, but it is good enough for recognizing screenshots.
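The selection rule can be tried out without OpenCV. The sketch below assumes the hierarchy has already been converted to a plain list of `[next, previous, first_child, parent]` entries (the layout described in the code comments above, with -1 meaning "none") and that contour 0 is the outer frame, as the article's approach assumes. `letter_indices` is a hypothetical helper, not part of OpenCV.

```python
from typing import List, Sequence

# One entry per contour: [next, previous, first_child, parent]; -1 means "none".
Hierarchy = Sequence[Sequence[int]]


def letter_indices(hierarchy: Hierarchy, frame_index: int = 0) -> List[int]:
    """Return indices of contours whose parent is the outer frame contour.

    Mirrors the `hierarchy[0][idx][3] == 0` test: direct children of the frame
    are letters; deeper levels (e.g. the hole in "O") are skipped.
    """
    return [idx for idx, (_nxt, _prev, _child, parent) in enumerate(hierarchy)
            if parent == frame_index]


# Toy tree for the text "HO": frame -> {H, O}, O -> {inner hole}
toy = [
    [-1, -1, 1, -1],  # 0: outer frame of the picture
    [2, -1, -1, 0],   # 1: outline of "H"
    [-1, 1, 3, 0],    # 2: outline of "O"
    [-1, -1, -1, 2],  # 3: hole inside "O"
]
print(letter_indices(toy))  # [1, 2]
```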

Result:

The next step is to save each letter after scaling it to a 28x28 square (the format in which the MNIST database is stored). In Python, OpenCV images are NumPy arrays, so we can use array operations for cropping and scaling.

    from typing import Any, List

    import cv2
    import numpy as np

    def letters_extract(image_file: str, out_size=28) -> List[Any]:
        img = cv2.imread(image_file)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY)
        img_erode = cv2.erode(thresh, np.ones((3, 3), np.uint8), iterations=1)

        # Get contours
        contours, hierarchy = cv2.findContours(img_erode, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

        output = img.copy()

        letters = []
        for idx, contour in enumerate(contours):
            (x, y, w, h) = cv2.boundingRect(contour)
            # hierarchy[i][3]: the index of the parent
            if hierarchy[0][idx][3] == 0:
                cv2.rectangle(output, (x, y), (x + w, y + h), (70, 0, 0), 1)
                letter_crop = gray[y:y + h, x:x + w]

                # Resize letter canvas to square
                size_max = max(w, h)
                letter_square = 255 * np.ones(shape=[size_max, size_max], dtype=np.uint8)
                if w > h:
                    # Enlarge image top-bottom
                    # ------
                    # ======
                    # ------
                    y_pos = size_max//2 - h//2
                    letter_square[y_pos:y_pos + h, 0:w] = letter_crop
                elif w < h:
                    # Enlarge image left-right
                    # --||--
                    x_pos = size_max//2 - w//2
                    letter_square[0:h, x_pos:x_pos + w] = letter_crop
                else:
                    letter_square = letter_crop

                # Resize letter to 28x28 and add letter and its X-coordinate
                letters.append((x, w, cv2.resize(letter_square, (out_size, out_size), interpolation=cv2.INTER_AREA)))

        # Sort array in place by X-coordinate
        letters.sort(key=lambda item: item[0], reverse=False)
        return letters

At the end, we sort the letters by X-coordinate. As you can see, we store each result as a tuple (x, w, letter) so that we can later detect spaces from the gaps between letters.
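The centering arithmetic in `letters_extract` can be checked on its own. This pure-Python sketch (the name `square_canvas_offsets` is hypothetical) returns the canvas size and the top-left position at which a w x h crop is pasted, using the same integer formulas as the code above. Because the crop is padded to a square before `cv2.resize`, the letter keeps its aspect ratio when scaled to 28x28.

```python
from typing import Tuple


def square_canvas_offsets(w: int, h: int) -> Tuple[int, int, int]:
    """Return (size, x_pos, y_pos) for pasting a w x h crop onto a square
    white canvas, using the same integer arithmetic as letters_extract."""
    size = max(w, h)
    if w > h:    # wide letter: pad top and bottom
        return size, 0, size // 2 - h // 2
    if w < h:    # tall letter: pad left and right
        return size, size // 2 - w // 2, 0
    return size, 0, 0  # already square


for w, h in [(10, 4), (3, 12), (7, 7)]:
    size, x_pos, y_pos = square_canvas_offsets(w, h)
    # The crop always fits inside the canvas
    assert x_pos + w <= size and y_pos + h <= size
    print((w, h), "->", (size, x_pos, y_pos))
```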

Making sure everything works:

cv2.imshow("0", letters[0][2]) cv2.imshow("1", letters[1][2]) cv2.imshow("2", letters[2][2]) cv2.imshow("3", letters[3][2]) cv2.imshow("4", letters[4][2]) cv2.waitKey(0) The letters are ready for recognition, we will recognize them using a convolutional network - this type of network is well suited for such tasks.

Neural network (CNN) for recognition

The EMNIST "byclass" set used here has 62 different symbols (0..9, A..Z, a..z), listed below as ASCII codes:

emnist_labels = [48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122]

Accordingly, the neural network has 62 outputs. Its input is a 28x28 image, and after recognition the output corresponding to the recognized symbol holds the largest value (ideally close to 1).
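The label list is simply the ASCII codes of the 62 symbols in order: digits, uppercase letters, lowercase letters. Here is a short sketch that rebuilds it with the standard library and decodes a class index the same way `chr(emnist_labels[...])` does later. The function name `build_emnist_labels` is hypothetical.

```python
import string
from typing import List


def build_emnist_labels() -> List[int]:
    """ASCII codes for the 62 EMNIST 'byclass' classes: 0-9, A-Z, a-z."""
    symbols = string.digits + string.ascii_uppercase + string.ascii_lowercase
    return [ord(c) for c in symbols]


emnist_labels = build_emnist_labels()
print(len(emnist_labels))       # 62
print(chr(emnist_labels[10]))   # A  (class 10 is the first letter)
print(chr(emnist_labels[36]))   # a
```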

Creating a network model.

    from tensorflow import keras
    from keras.models import Sequential
    from keras.layers import Convolution2D, MaxPooling2D, Dropout, Flatten, Dense

    def emnist_model():
        model = Sequential()
        model.add(Convolution2D(filters=32, kernel_size=(3, 3), padding='valid', input_shape=(28, 28, 1), activation='relu'))
        model.add(Convolution2D(filters=64, kernel_size=(3, 3), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))
        model.add(Flatten())
        model.add(Dense(512, activation='relu'))
        model.add(Dropout(0.5))
        model.add(Dense(len(emnist_labels), activation='softmax'))
        model.compile(loss='categorical_crossentropy', optimizer='adadelta', metrics=['accuracy'])
        return model

As you can see, this is a classic convolutional network. The convolutional layers (with 32 and 64 filters) extract features of the image, and their output feeds a regular fully connected (MLP) network that forms the final result.
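To make the architecture concrete, the arithmetic below traces the tensor shapes and trainable parameter counts of `emnist_model()` for a 28x28x1 input. It is a hand-written pure-Python sketch rather than a call into Keras. `model.summary()` should report the same per-layer numbers.

```python
def conv_valid(size: int, kernel: int) -> int:
    """Output side length of a 'valid' (unpadded) convolution with stride 1."""
    return size - kernel + 1


def conv_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Weights plus one bias per filter."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(n_in: int, n_out: int) -> int:
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


num_classes = 62
side = conv_valid(28, 3)             # Conv2D 32 filters: 26x26x32
p1 = conv_params(3, 1, 32)
side = conv_valid(side, 3)           # Conv2D 64 filters: 24x24x64
p2 = conv_params(3, 32, 64)
side //= 2                           # MaxPooling2D 2x2: 12x12x64
flat = side * side * 64              # Flatten (Dropout adds no parameters)
p3 = dense_params(flat, 512)         # Dense 512
p4 = dense_params(512, num_classes)  # Dense 62 (softmax)

total = p1 + p2 + p3 + p4
print(flat, [p1, p2, p3, p4], total)
# 9216 [320, 18496, 4719104, 31806] 4769726
```

Almost all of the weights sit in the first Dense layer, which is typical of this Flatten-then-Dense design.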

Neural network training

Let's move on to the longest stage: training the network. For this we will take the EMNIST database, which can be downloaded from the link (archive size 536 MB).

To read the database, we use the idx2numpy library. Then we prepare the data for training and validation.

    import idx2numpy
    import numpy as np
    from tensorflow import keras

    emnist_path = '/home/Documents/TestApps/keras/emnist/'
    X_train = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-train-images-idx3-ubyte')
    y_train = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-train-labels-idx1-ubyte')

    X_test = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-test-images-idx3-ubyte')
    y_test = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-test-labels-idx1-ubyte')

    X_train = np.reshape(X_train, (X_train.shape[0], 28, 28, 1))
    X_test = np.reshape(X_test, (X_test.shape[0], 28, 28, 1))

    print(X_train.shape, y_train.shape, X_test.shape, y_test.shape, len(emnist_labels))

    # Use only 1/k of the data to shorten training
    k = 10
    X_train = X_train[:X_train.shape[0] // k]
    y_train = y_train[:y_train.shape[0] // k]
    X_test = X_test[:X_test.shape[0] // k]
    y_test = y_test[:y_test.shape[0] // k]

    # Normalize pixel values to [0, 1]
    X_train = X_train.astype(np.float32)
    X_train /= 255.0
    X_test = X_test.astype(np.float32)
    X_test /= 255.0

    # One-hot encode the labels
    x_train_cat = keras.utils.to_categorical(y_train, len(emnist_labels))
    y_test_cat = keras.utils.to_categorical(y_test, len(emnist_labels))

We have prepared two sets, one for training and one for validation. The symbols themselves are ordinary arrays that are easy to display:

We also use only 1/10 of the dataset for training (parameter k), otherwise the process will take at least 10 hours.
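`keras.utils.to_categorical` turns each integer label into a one-hot vector of length 62, and slicing with `// k` keeps the first 1/k of each array. Here is a minimal pure-Python sketch of both steps. The helpers `one_hot` and `take_fraction` and the sample labels are hypothetical stand-ins, not Keras functions or real EMNIST data.

```python
from typing import List, Sequence


def one_hot(labels: Sequence[int], num_classes: int) -> List[List[float]]:
    """Same idea as keras.utils.to_categorical: row i has a 1.0 at labels[i]."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


def take_fraction(items: Sequence, k: int) -> list:
    """Keep the first len(items) // k elements, as in X_train[:X_train.shape[0] // k]."""
    return list(items[: len(items) // k])


labels = [3, 0, 61, 10, 36, 5, 5, 2, 9, 1, 7]
subset = take_fraction(labels, 10)   # 11 // 10 == 1 element
print(subset)                        # [3]
print(one_hot([0, 10], 62)[1][10])   # 1.0
```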

We start training the network and, at the end of the process, save the trained model to disk.

    model = emnist_model()

    # Set a learning rate reduction
    learning_rate_reduction = keras.callbacks.ReduceLROnPlateau(monitor='val_accuracy', patience=3, verbose=1, factor=0.5, min_lr=0.00001)

    model.fit(X_train, x_train_cat, validation_data=(X_test, y_test_cat), callbacks=[learning_rate_reduction], batch_size=64, epochs=30)

    model.save('emnist_letters.h5')

The training process itself takes about half an hour:

This needs to be done only once, then we will use the already saved model file. When the training is over, everything is ready, you can recognize the text.
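The `ReduceLROnPlateau` callback used above halves the learning rate (factor=0.5) whenever `val_accuracy` has not improved for 3 epochs (patience=3), but never lets it drop below min_lr=0.00001. The sketch below is a simplified pure-Python model of that rule, written only for illustration. It assumes the callback's default cooldown of 0 and treats an epoch as an improvement only when accuracy beats the best so far by more than 1e-4. The function name `simulate_plateau_schedule` and the accuracy values are made up.

```python
from typing import List, Sequence


def simulate_plateau_schedule(val_acc: Sequence[float], lr: float,
                              factor: float = 0.5, patience: int = 3,
                              min_lr: float = 1e-5,
                              min_delta: float = 1e-4) -> List[float]:
    """Return the learning rate in effect after each epoch.

    Simplified model of a 'max'-mode plateau scheduler: an epoch counts as an
    improvement only if accuracy beats the best so far by more than min_delta.
    """
    best = float("-inf")
    wait = 0
    history = []
    for acc in val_acc:
        if acc > best + min_delta:
            best = acc
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history


# Hypothetical accuracies: improvement stops after the second epoch
print(simulate_plateau_schedule([0.50, 0.60, 0.60, 0.60, 0.60, 0.60, 0.60, 0.60], lr=1.0))
# [1.0, 1.0, 1.0, 1.0, 0.5, 0.5, 0.5, 0.25]
```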

Identification

For recognition, we load the model, call model.predict, and take the index of the largest output with np.argmax.

    model = keras.models.load_model('emnist_letters.h5')

    def emnist_predict_img(model, img):
        img_arr = np.expand_dims(img, axis=0)
        # Invert: black-on-white crops become light-on-dark, like the dataset
        img_arr = 1 - img_arr/255.0
        # Undo the dataset's orientation (rotate, then mirror)
        img_arr[0] = np.rot90(img_arr[0], 3)
        img_arr[0] = np.fliplr(img_arr[0])
        img_arr = img_arr.reshape((1, 28, 28, 1))

        predict = model.predict(img_arr)
        result = np.argmax(predict, axis=1)
        return chr(emnist_labels[result[0]])

As it turned out, the images in the dataset are stored transposed (rotated and mirrored), so before recognition we rotate and flip each letter the same way.
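Undoing the dataset's orientation amounts to a transpose: rotating 90° clockwise (`np.rot90(..., 3)`) and then mirroring left-right (`np.fliplr`) swaps rows and columns. The pure-Python sketch below checks this on a small non-square matrix and also shows the `1 - x/255.0` inversion. The helper names are hypothetical and only mimic the NumPy calls.

```python
from typing import List

Matrix = List[List[float]]


def rot90_clockwise(m: Matrix) -> Matrix:
    """Same result as np.rot90(m, 3): one quarter turn clockwise."""
    return [list(row) for row in zip(*m[::-1])]


def fliplr(m: Matrix) -> Matrix:
    """Same result as np.fliplr(m): reverse each row."""
    return [row[::-1] for row in m]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def invert(m: Matrix) -> Matrix:
    """1 - x/255.0: white background (255) -> 0.0, black ink (0) -> 1.0."""
    return [[1 - px / 255.0 for px in row] for row in m]


a = [[1, 2, 3],
     [4, 5, 6]]
print(fliplr(rot90_clockwise(a)))                  # [[1, 4], [2, 5], [3, 6]]
print(fliplr(rot90_clockwise(a)) == transpose(a))  # True
print(invert([[255, 0]]))                          # [[0.0, 1.0]]
```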

The final function, which takes an image file as input and a string as output, takes only 10 lines of code:

    from typing import Any

    def img_to_str(model: Any, image_file: str):
        letters = letters_extract(image_file)
        s_out = ""
        for i in range(len(letters)):
            # Gap between the right edge of this letter and the left edge of the next
            dn = letters[i+1][0] - letters[i][0] - letters[i][1] if i < len(letters) - 1 else 0
            s_out += emnist_predict_img(model, letters[i][2])
            if dn > letters[i][1]/4:
                s_out += ' '
        return s_out

Here we use the previously saved character width to add spaces if the gap between letters is more than 1/4 of a character.
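The spacing rule is easy to test without a model. The sketch below takes already-recognized characters together with the `(x, w)` pairs that `letters_extract` stores, sorts them left to right, and inserts a space when the gap to the next box exceeds a quarter of the current character's width. `join_with_spaces` and the box coordinates are made up for illustration.

```python
from typing import List, Tuple

# (x, w, char): left edge, width, and the recognized character
Box = Tuple[int, int, str]


def join_with_spaces(boxes: List[Box]) -> str:
    boxes = sorted(boxes, key=lambda b: b[0])  # left to right, as in letters_extract
    out = ""
    for i, (x, w, ch) in enumerate(boxes):
        out += ch
        if i < len(boxes) - 1:
            gap = boxes[i + 1][0] - x - w  # empty pixels before the next letter
            if gap > w / 4:
                out += " "
    return out


# Hypothetical boxes: letters 20 px wide, 2 px apart, 30 px gap between words
boxes = [(0, 20, "H"), (22, 20, "I"), (72, 20, "Y"), (94, 20, "O"), (116, 20, "U")]
print(join_with_spaces(boxes))  # HI YOU
```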

Usage example:

    model = keras.models.load_model('emnist_letters.h5')
    s_out = img_to_str(model, "hello_world.png")
    print(s_out)

Result:

A funny detail: the neural network "mixed up" the letter "O" and the digit "0". This is not surprising, however, because the original EMNIST set contains handwritten letters and digits that do not look quite like printed ones. Ideally, to recognize on-screen text, you would prepare a separate dataset based on screen fonts and train the neural network on it.

Conclusion

As you can see, this is not rocket science: what once seemed like “magic” is done quite easily with the help of modern libraries.

Since Python is cross-platform, the code should work everywhere: on Windows, Linux and OS X. It seems that Keras models can also be ported to iOS/Android, so in theory the trained model could be used on mobile devices as well.

For those who want to experiment on their own, the full source code is below.

keras_emnist.py

    import os
    # Force CPU
    # os.environ["CUDA_VISIBLE_DEVICES"] = "-1"
    # Debug messages
    # 0 = all messages are logged (default behavior)
    # 1 = INFO messages are not printed
    # 2 = INFO and WARNING messages are not printed
    # 3 = INFO, WARNING, and ERROR messages are not printed
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

    import cv2
    import imghdr
    import numpy as np
    import pathlib
    from tensorflow import keras
    from keras.models import Sequential
    from keras import optimizers
    from keras.layers import Convolution2D, MaxPooling2D, Dropout, Flatten, Dense, Reshape, LSTM, BatchNormalization
    from keras.optimizers import SGD, RMSprop, Adam
    from keras import backend as K
    from keras.constraints import maxnorm
    import tensorflow as tf
    from scipy import io as spio
    import idx2numpy  # sudo pip3 install idx2numpy
    from matplotlib import pyplot as plt
    from typing import *
    import time

    # Dataset:
    # https://www.nist.gov/node/1298471/emnist-dataset
    # https://www.itl.nist.gov/iaui/vip/cs_links/EMNIST/gzip.zip

    def cnn_print_digit(d):
        print(d.shape)
        for x in range(28):
            s = ""
            for y in range(28):
                s += "{0:.1f} ".format(d[28*y + x])
            print(s)


    def cnn_print_digit_2d(d):
        print(d.shape)
        for y in range(d.shape[0]):
            s = ""
            for x in range(d.shape[1]):
                s += "{0:.1f} ".format(d[x][y])
            print(s)


    emnist_labels = [48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122]


    def emnist_model():
        model = Sequential()
        model.add(Convolution2D(filters=32, kernel_size=(3, 3), padding='valid', input_shape=(28, 28, 1), activation='relu'))
        model.add(Convolution2D(filters=64, kernel_size=(3, 3), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))
        model.add(Flatten())
        model.add(Dense(512, activation='relu'))
        model.add(Dropout(0.5))
        model.add(Dense(len(emnist_labels), activation='softmax'))
        model.compile(loss='categorical_crossentropy', optimizer='adadelta', metrics=['accuracy'])
        return model


    def emnist_model2():
        model = Sequential()
        # In Keras there are two options for padding: 'same' or 'valid'.
        # 'same' zero-pads the input so the output keeps its spatial size; 'valid' means no padding.
        model.add(Convolution2D(filters=32, kernel_size=(3, 3), activation='relu', padding='same', input_shape=(28, 28, 1)))
        model.add(MaxPooling2D((2, 2)))
        model.add(Convolution2D(64, (3, 3), activation='relu', padding='same'))
        model.add(MaxPooling2D((2, 2)))
        model.add(Convolution2D(128, (3, 3), activation='relu', padding='same'))
        model.add(MaxPooling2D((2, 2)))
        # model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
        # model.add(MaxPooling2D((2, 2)))
        ## model.add(Dropout(0.25))
        model.add(Flatten())
        model.add(Dense(512, activation='relu'))
        model.add(Dropout(0.5))
        model.add(Dense(len(emnist_labels), activation='softmax'))
        model.compile(loss='categorical_crossentropy', optimizer='adadelta', metrics=['accuracy'])
        return model


    def emnist_model3():
        model = Sequential()
        model.add(Convolution2D(filters=32, kernel_size=(3, 3), padding='same', input_shape=(28, 28, 1), activation='relu'))
        model.add(Convolution2D(filters=32, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))
        model.add(Convolution2D(filters=64, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(Convolution2D(filters=64, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
        model.add(Dropout(0.25))
        model.add(Flatten())
        model.add(Dense(512, activation="relu"))
        model.add(Dropout(0.5))
        model.add(Dense(len(emnist_labels), activation="softmax"))
        model.compile(loss='categorical_crossentropy', optimizer=RMSprop(learning_rate=0.001, rho=0.9, epsilon=1e-08), metrics=['accuracy'])
        return model


    def emnist_train(model):
        t_start = time.time()

        emnist_path = 'D:\\Temp\\1\\'
        X_train = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-train-images-idx3-ubyte')
        y_train = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-train-labels-idx1-ubyte')

        X_test = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-test-images-idx3-ubyte')
        y_test = idx2numpy.convert_from_file(emnist_path + 'emnist-byclass-test-labels-idx1-ubyte')

        X_train = np.reshape(X_train, (X_train.shape[0], 28, 28, 1))
        X_test = np.reshape(X_test, (X_test.shape[0], 28, 28, 1))

        print(X_train.shape, y_train.shape, X_test.shape, y_test.shape, len(emnist_labels))

        # Test:
        k = 10
        X_train = X_train[:X_train.shape[0] // k]
        y_train = y_train[:y_train.shape[0] // k]
        X_test = X_test[:X_test.shape[0] // k]
        y_test = y_test[:y_test.shape[0] // k]

        # Normalize
        X_train = X_train.astype(np.float32)
        X_train /= 255.0
        X_test = X_test.astype(np.float32)
        X_test /= 255.0

        x_train_cat = keras.utils.to_categorical(y_train, len(emnist_labels))
        y_test_cat = keras.utils.to_categorical(y_test, len(emnist_labels))

        # Set a learning rate reduction
        learning_rate_reduction = keras.callbacks.ReduceLROnPlateau(monitor='val_accuracy', patience=3, verbose=1, factor=0.5, min_lr=0.00001)

        model.fit(X_train, x_train_cat, validation_data=(X_test, y_test_cat), callbacks=[learning_rate_reduction], batch_size=64, epochs=30)
        print("Training done, dT:", time.time() - t_start)


    def emnist_predict(model, image_file):
        img = keras.preprocessing.image.load_img(image_file, target_size=(28, 28), color_mode='grayscale')
        return emnist_predict_img(model, img)


    def emnist_predict_img(model, img):
        img_arr = np.expand_dims(img, axis=0)
        img_arr = 1 - img_arr/255.0
        img_arr[0] = np.rot90(img_arr[0], 3)
        img_arr[0] = np.fliplr(img_arr[0])
        img_arr = img_arr.reshape((1, 28, 28, 1))

        predict = model.predict(img_arr)
        result = np.argmax(predict, axis=1)
        return chr(emnist_labels[result[0]])


    def letters_extract(image_file: str, out_size=28):
        img = cv2.imread(image_file)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY)
        img_erode = cv2.erode(thresh, np.ones((3, 3), np.uint8), iterations=1)

        # Get contours
        contours, hierarchy = cv2.findContours(img_erode, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

        output = img.copy()

        letters = []
        for idx, contour in enumerate(contours):
            (x, y, w, h) = cv2.boundingRect(contour)
            # print("R", idx, x, y, w, h, cv2.contourArea(contour), hierarchy[0][idx])
            # hierarchy[i][0]: the index of the next contour of the same level
            # hierarchy[i][1]: the index of the previous contour of the same level
            # hierarchy[i][2]: the index of the first child
            # hierarchy[i][3]: the index of the parent
            if hierarchy[0][idx][3] == 0:
                cv2.rectangle(output, (x, y), (x + w, y + h), (70, 0, 0), 1)
                letter_crop = gray[y:y + h, x:x + w]
                # print(letter_crop.shape)

                # Resize letter canvas to square
                size_max = max(w, h)
                letter_square = 255 * np.ones(shape=[size_max, size_max], dtype=np.uint8)
                if w > h:
                    # Enlarge image top-bottom
                    # ------
                    # ======
                    # ------
                    y_pos = size_max//2 - h//2
                    letter_square[y_pos:y_pos + h, 0:w] = letter_crop
                elif w < h:
                    # Enlarge image left-right
                    # --||--
                    x_pos = size_max//2 - w//2
                    letter_square[0:h, x_pos:x_pos + w] = letter_crop
                else:
                    letter_square = letter_crop

                # Resize letter to 28x28 and add letter and its X-coordinate
                letters.

append((x, w, cv2.resize(letter_square, (out_size, out_size), interpolation=cv2.INTER_AREA))) # Sort array in place by X-coordinate letters.sort(key=lambda x: x[0], reverse=False) # cv2.imshow("Input", img) # # cv2.imshow("Gray", thresh) # cv2.imshow("Enlarged", img_erode) # cv2.imshow("output", output) # cv2.imshow("0", letters[0][2]) # cv2.imshow("1", letters[1][2]) # cv2.imshow("2", letters[2][2]) # cv2.imshow("3", letters[3][2]) # cv2.imshow("4", letters[4][2]) # cv2.waitKey(0) return letters def img_to_str(model: Any, image_file: str): letters = letters_extract(image_file) s_out="" for i in range(len(letters)): dn = letters[i+1][0] - letters[i][0] - letters[i][1] if i < len(letters) - 1 else 0 s_out += emnist_predict_img(model, letters[i][2]) if (dn > letters[i][1]/4): s_out += ' ' return s_out if __name__ == "__main__": # model = emnist_model() # emnist_train(model) #model.

save('emnist_letters.h5') model = keras.models.load_model('emnist_letters.h5') s_out = img_to_str(model, "hello_world.png") print(s_out)
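The less obvious array steps in the listing above can be checked in isolation, without a trained model or OpenCV. The sketch below is an illustration, not the author's code: the helper names `to_emnist_orientation`, `decode_predictions`, `pad_to_square` and `join_with_spaces` are hypothetical, and each restates one step of `emnist_predict_img`, `letters_extract` or `img_to_str` as we read it. It also shows that rotating 90 degrees clockwise and then mirroring left-right amounts to a plain transpose, which is what EMNIST's transposed image storage calls for.

```python
import numpy as np

# ASCII codes of the 62 EMNIST "byclass" classes (0-9, A-Z, a-z);
# equivalent to emnist_labels in the listing above.
EMNIST_LABELS = list(range(48, 58)) + list(range(65, 91)) + list(range(97, 123))


def to_emnist_orientation(img: np.ndarray) -> np.ndarray:
    """Rotate 90 degrees clockwise, then mirror left-right (as in emnist_predict_img)."""
    return np.fliplr(np.rot90(img, 3))


def decode_predictions(probs: np.ndarray) -> str:
    """Map each row of class scores to its character via argmax."""
    return "".join(chr(EMNIST_LABELS[i]) for i in np.argmax(probs, axis=1))


def pad_to_square(crop: np.ndarray, fill: int = 255) -> np.ndarray:
    """Center a letter crop on a square canvas filled with `fill` (white),
    mirroring the canvas step in letters_extract before the resize."""
    h, w = crop.shape
    size = max(h, w)
    square = np.full((size, size), fill, dtype=crop.dtype)
    y0 = size // 2 - h // 2
    x0 = size // 2 - w // 2
    square[y0:y0 + h, x0:x0 + w] = crop
    return square


def join_with_spaces(boxes: list[tuple[int, int]], chars: str) -> str:
    """boxes holds (x, w) per letter, sorted by x. A space follows a letter when the
    gap to the next letter is wider than a quarter of that letter's width."""
    out = ""
    for i, ch in enumerate(chars):
        out += ch
        if i < len(boxes) - 1:
            gap = boxes[i + 1][0] - boxes[i][0] - boxes[i][1]
            if gap > boxes[i][1] / 4:
                out += " "
    return out


if __name__ == "__main__":
    a = np.arange(6).reshape(2, 3)
    print(np.array_equal(to_emnist_orientation(a), a.T))  # True
    print(decode_predictions(np.eye(62)[[17, 18, 1]]))  # HI1
    print(pad_to_square(np.zeros((2, 4), dtype=np.uint8)))
    print(join_with_spaces([(0, 10), (12, 10), (40, 10), (52, 10)], "hiyo"))  # hi yo
```

Since the rotate-and-mirror pair reduces to a transpose, `img.T` would be an equivalent alternative in `emnist_predict_img`. Note that the spacing rule compares each gap only with the width of the letter before it, so very narrow letters such as `i` or `l` may trigger spurious spaces.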

As usual, good luck with your experiments.

Knows letters but cannot read | Family

Clever


09/05/2022


The main problem in teaching reading!

Even if a child knows all the letters and confidently points to each one, putting them together into a word can be very hard. Often the process simply stalls and drags on for years.

Why? A few facts. Studies show that knowing the letters is not directly related to the ability to read. White matter in the temporo-parietal region of the left hemisphere plays a key role in reading skills, and until it has developed sufficiently, the child will not be able to read (Ch. Myers, M. Vandermosten, 2014).

Forcing a child to learn letters is harmful. Psychologists say that if the process “hasn't started,” it cannot be forced through drilling, since the parts of the brain responsible for letter recognition may not have matured yet.


Letter-by-letter learning slows reading down. If the eye moves from letter to letter, each letter becomes a fixation point, so reading stumbles and the pace is slow.

As you have probably guessed, we at “Umnitsa” do not recommend deliberately drilling letters with a child. You can learn them as a game, when you are both enjoying it. But don't expect this alone to get the child reading. Incidentally, the classic school method of teaching reading is also based on memorizing individual letters. Remember your own class and how hard it was for many children to fight their way through that jungle.

So it's better to teach reading BEFORE school, but only in the right, playful way:

Without memorization. Through play! In a game where there is nothing to memorize, the child can pick up the materials, turn them around, build words on his own and read them straight away, even if he didn't know the letters before.


This is how your little one can learn to read with our extraordinary Clever® bricks, “Easy to Read” (3+). Already in the first lesson, he will be able to read his first word, because he will understand the principle!

